---------------------------------------------------------------------------------------------------------------------

| Id | Operation | Name | Starts | E-Rows | A-Rows |

---------------------------------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | | 48 |

| * 1 | HASH JOIN RIGHT OUTER | | 1 | 829K| 48 |

| * 2 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

| * 3 | HASH JOIN OUTER | | 1 | 539K| 48 |

|- 4 | NESTED LOOPS OUTER | | 1 | 539K| 48 |

|- 5 | STATISTICS COLLECTOR | | 1 | | 48 |

| * 6 | HASH JOIN OUTER | | 1 | 350K| 48 |

|- 7 | NESTED LOOPS OUTER | | 1 | 350K| 48 |

|- 8 | STATISTICS COLLECTOR | | 1 | | 48 |

| * 9 | HASH JOIN OUTER | | 1 | 228K| 48 |

|- 10 | NESTED LOOPS OUTER | | 1 | 228K| 48 |

|- 11 | STATISTICS COLLECTOR | | 1 | | 48 |

| * 12 | HASH JOIN OUTER | | 1 | 148K| 48 |

|- 13 | NESTED LOOPS OUTER | | 1 | 148K| 48 |

|- 14 | STATISTICS COLLECTOR | | 1 | | 48 |

| * 15 | HASH JOIN OUTER | | 1 | 96510 | 48 |

|- 16 | NESTED LOOPS OUTER | | 1 | 96510 | 48 |

|- 17 | STATISTICS COLLECTOR | | 1 | | 48 |

| * 18 | HASH JOIN OUTER | | 1 | 62771 | 48 |

|- 19 | NESTED LOOPS OUTER | | 1 | 62771 | 48 |

|- 20 | STATISTICS COLLECTOR | | 1 | | 48 |

|- * 21 | HASH JOIN OUTER | | 1 | 40827 | 48 |

| 22 | NESTED LOOPS OUTER | | 1 | 40827 | 48 |

|- 23 | STATISTICS COLLECTOR | | 1 | | 48 |

|- * 24 | HASH JOIN OUTER | | 1 | 26554 | 48 |

| 25 | NESTED LOOPS OUTER | | 1 | 26554 | 48 |

|- 26 | STATISTICS COLLECTOR | | 1 | | 48 |

|- * 27 | HASH JOIN OUTER | | 1 | 17271 | 48 |

| 28 | NESTED LOOPS OUTER | | 1 | 17271 | 48 |

|- 29 | STATISTICS COLLECTOR | | 1 | | 48 |

|- * 30 | HASH JOIN OUTER | | 1 | 11305 | 48 |

| 31 | NESTED LOOPS OUTER | | 1 | 11305 | 48 |

|- 32 | STATISTICS COLLECTOR | | 1 | | 48 |

| 33 | BATCHED TABLE ACCESS BY INDEX ROWID | TAB | 1 | 9 | 48 |

| * 34 | INDEX RANGE SCAN | FK_TAB_PROP | 1 | 9 | 48 |

| 35 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 48 | 1326 | 48 |

| * 36 | INDEX UNIQUE SCAN | PK_TAB_PROP | 48 | 1 | 48 |

|- * 37 | TABLE ACCESS FULL | TAB_PROP | 0 | 1326 | 0 |

| 38 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 48 | 2 | 48 |

| * 39 | INDEX UNIQUE SCAN | PK_TAB_PROP | 48 | 1 | 48 |

|- * 40 | TABLE ACCESS FULL | TAB_PROP | 0 | 2 | 0 |

| 41 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 48 | 2 | 48 |

| * 42 | INDEX UNIQUE SCAN | PK_TAB_PROP | 48 | 1 | 48 |

|- * 43 | TABLE ACCESS FULL | TAB_PROP | 0 | 2 | 0 |

| 44 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 48 | 2 | 48 |

| * 45 | INDEX UNIQUE SCAN | PK_TAB_PROP | 48 | 1 | 48 |

|- * 46 | TABLE ACCESS FULL | TAB_PROP | 0 | 2 | 0 |

|- 47 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 48 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 49 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

|- 50 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 51 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 52 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

|- 53 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 54 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 55 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

|- 56 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 57 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 58 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

|- 59 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 60 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 61 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

|- 62 | TABLE ACCESS BY INDEX ROWID | TAB_PROP | 0 | 2 | 0 |

|- * 63 | INDEX UNIQUE SCAN | PK_TAB_PROP | 0 | | 0 |

| * 64 | TABLE ACCESS FULL | TAB_PROP | 1 | 2486K| 2486K|

---------------------------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

2 - filter("V"."PROP_ID"=84)

3 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

6 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

9 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

12 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

15 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

18 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

21 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

24 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

27 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

30 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID")

34 - access("LI"."TAB_ID"=842300)

36 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=94)

37 - filter("V"."PROP_ID"=94)

39 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=89)

40 - filter("V"."PROP_ID"=89)

42 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=93)

43 - filter("V"."PROP_ID"=93)

45 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=90)

46 - filter("V"."PROP_ID"=90)

48 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=79)

49 - filter("V"."PROP_ID"=79)

51 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=81)

52 - filter("V"."PROP_ID"=81)

54 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=96)

55 - filter("V"."PROP_ID"=96)

57 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=95)

58 - filter("V"."PROP_ID"=95)

60 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=88)

61 - filter("V"."PROP_ID"=88)

63 - access("LI"."TAB_PROP_ID"="V"."TAB_PROP_ID" AND "V"."PROP_ID"=82)

64 - filter("V"."PROP_ID"=82)

Note

-----

- dynamic statistics used: dynamic sampling (level=2)

- this is an adaptive plan (rows marked '-' are inactive)

- 1 Sql Plan Directive used for this statement
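A plan listing with `Starts`, `E-Rows` and `A-Rows` columns, and with inactive adaptive rows marked `-`, is the kind of output `DBMS_XPLAN.DISPLAY_CURSOR` produces. The following is a minimal sketch of how such a report might be generated, not necessarily how the listing above was obtained. It assumes the statement has just been run in the same session with rowsource statistics enabled, and that `SERVEROUTPUT` is off in SQL*Plus. The real output normally carries extra columns (buffers, timings) that are not shown above.

```sql
-- Assumption: rowsource statistics are collected for this session.
-- A /*+ GATHER_PLAN_STATISTICS */ hint in the statement is an alternative.
ALTER SESSION SET statistics_level = ALL;

-- ... execute the statement under investigation here ...

-- sql_id => NULL reports on the last statement executed in this session.
-- ALLSTATS LAST : estimated vs. actual rows for the most recent execution
-- +ADAPTIVE     : also list the inactive plan rows, prefixed with '-'
SELECT *
FROM   TABLE(DBMS_XPLAN.DISPLAY_CURSOR(
               sql_id          => NULL,
               cursor_child_no => NULL,
               format          => 'ALLSTATS LAST +ADAPTIVE'));
```

Comparing `E-Rows` with `A-Rows` line by line in that report (for example 829K estimated versus 48 actual on operation 1) is the quickest way to see where the optimizer's cardinality estimates diverge from reality.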

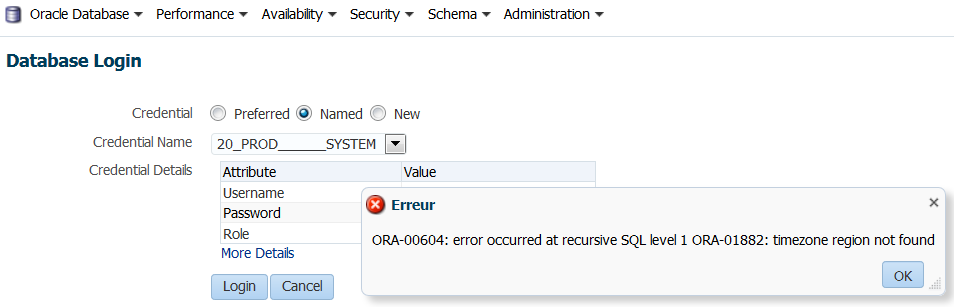

My Oracle Support (MOS) has several notes on the error stack "ORA-00604: error occurred at recursive SQL level 1" followed by "ORA-01882: timezone region not found". The most relevant are Doc ID 1513536.1 and Doc ID 1934470.1.
