If you use Block Change Tracking on your production database and try to duplicate it, you there are good possibilities that you will encounter this error:

|

1 2 3 |

ORA-19755: could not open change tracking file ORA-19750: change tracking file: ‘/u02/oradata/ORCL/ORCL_bct.dbf’ ORA-27037: unable to obtain file status |

The problem is caused by the block change tracking file entry that exists in the target controlfile, but Oracle can’t find the file because the directory structure on the auxiliary server changes.

After the restore and recovery of the auxiliary database, the duplicate process tries to open the DB but the bct file doesn’t exist and the error is thrown.

If you do a quick google search you will find several workarounds:

- disable the block change tracking after you get the error and manually open the auxiliary instance (this prevent the possibility to get the duplicate outcome from the rman return code)

- disable the BCT on the target before running the duplicate (this forces your incremental backups to read all your target database!)

- Richard Harrison proposed another workaround, you can read more about it here.

There is another workaround that I like more (and that you can also find as comment in Richard’s post):

- Disable the Block Change Tracking on the auxiliary while it’s doing the restore/recovery (in mounted status)

(This solutions isn’t coming from me, but as far as I know, the father of this solution is a colleague working at Trivadis.)

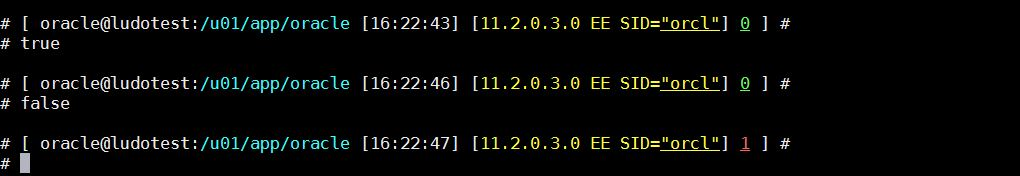

You can easily fork a process before running the duplicate command that:

- loops and checks the auxiliary instance status

- run the disable as soon as the auxiliary is mounted

I’ve worked out this script that does the job:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 |

#!/bin/ksh if [ $# -ne 1 ] ; then echo "Usage: $0 \$ORACLE_SID" exit 1 fi ORACLE_SID=$1 export ORACLE_SID ORACLE_HOME=`cat /etc/oratab | grep ^$ORACLE_SID | awk -F":" '{print $2}'` export ORACLE_HOME disable_trk () { echo "DISABLE BLOCK CHANGE TRACKING" sqlplus -s / as sysdba << EOF set echo on col FILENAME for a30 ALTER DATABASE DISABLE BLOCK CHANGE TRACKING; select * from v\$block_change_tracking; EOF } echo "Checking block change tracking for $ORACLE_SID" PATH=${ORACLE_HOME}/bin:$PATH; export PATH ## for how much time do you want to run the check? INTERVAL=30 MAXCOUNT=30 # loop until the database status is either MOUNTED or OPEN COUNT=1 while [ $COUNT -le $MAXCOUNT ] ; do STATUS=`sqlplus -s / as sysdba <<EOF whenever sqlerror exit SQL.SQLCODE; set head off feed off select status from v\\$instance; EOF ` if [ $? -eq 0 ] ; then if [ $STATUS != "MOUNTED" ] && [ $STATUS != "OPEN" ] ; then echo "$COUNT : Still not mounted" sleep $INTERVAL COUNT=$(($COUNT+1)) continue; else echo echo "If there is an error, BCT is enabled but Oracle can't find the file (ORA-27037)" echo "This is normal, I'll procede with the disable to avoid the error at the end of the duplicate." sqlplus -s / as sysdba <<EOF 2>/dev/null whenever sqlerror exit SQL.SQLCODE; set head off feed off select status from v\$block_change_tracking where status='ENABLED'; EOF # if i get an error, BCT is enabled but Oracle can't find the file (ORA-27037) if [ $? -eq 0 ] ; then echo "BCT already disabled" else echo "Got the error. Let's disable the BCT!" disable_trk fi break; fi else echo "$COUNT : Still not mounted" sleep $INTERVAL COUNT=$(($COUNT+1)) continue; fi done |

Run it just before the duplicate! e.g.

|

1 2 |

/u01/app/oracle/local/bin/disable_trk_dup.sh $ORACLE_SID & rman target=... auxiliary=... |

HTH

—

Ludovico