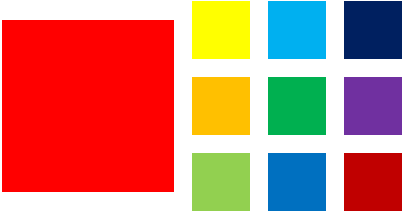

As I’ve written in my previous post, the inmemory_size parameter is static, so you need to restart your instance to activate it or change its size. Let’s try to set it at 600M.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

SQL> show parameter inmem NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ inmemory_clause_default string inmemory_force string DEFAULT inmemory_query string ENABLE inmemory_size big integer 0 SQL> alter system set inmemory_size=600M scope=spfile; SQL> shutdown ... SQL> startup ... SQL> show parameter inmem NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ inmemory_clause_default string inmemory_force string DEFAULT inmemory_query string ENABLE inmemory_size big integer 608M |

First interesting thing: it has been rounded to 608M so it works in chunks of 16M. (to be verified)

Which views can you select for further information?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SQL> select view_name from dba_views where view_name like 'V_$IM%'; VIEW_NAME -------------------------------------------------------------------------------- V_$IM_SEGMENTS_DETAIL V_$IM_SEGMENTS V_$IM_USER_SEGMENTS V_$IM_TBS_EXT_MAP V_$IM_SEG_EXT_MAP V_$IM_HEADER V_$IM_COL_CU V_$IM_SMU_HEAD V_$IM_SMU_CHUNK V_$IM_COLUMN_LEVEL 10 rows selected |

V$IM_SEGMENTS gives a few information about the segments that have a columnar version, including the segment size, the actual memory allocated, the population status and other compression indicators.

The other views help understand the various memory chunks and the status for each column in the segment.

Let’s create a table with a few records:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

SQL> create table ludovico.tinmem as select a.OWNER , a.TABLE_NAME , b.owner owner2, b.table_name table_name2, a.TABLESPACE_NAME , a.STATUS , a.PCT_FREE , a.PCT_USED , a.INI_TRANS , a.MAX_TRANS , a.INITIAL_EXTENT , a.NEXT_EXTENT , a.MIN_EXTENTS , a.MAX_EXTENTS , a.PCT_INCREASE , a.FREELISTS , a.FREELIST_GROUPS , a.LOGGING 22 from all_tables a, all_tables b; Table created. SQL> select count(*) from ludovico.tinmem; COUNT(*) ---------- 5470921 SQL> |

The table is very simple, it’s a cartesian of two “all_tables” views.

Let’s also create an index on it:

|

1 2 3 4 5 6 7 8 |

SQL> create index ludovico.tinmem_ix1 on ludovico.tinmem (table_name, pct_increase); SQL> select segment_name, bytes/1024/1024 from dba_segments where owner='LUDOVICO'; SEGMENT_NAME BYTES/1024/1024 ----------------- --------------- TINMEM 621 TINMEM_IX1 192 |

The table uses 621M and the index 192M.

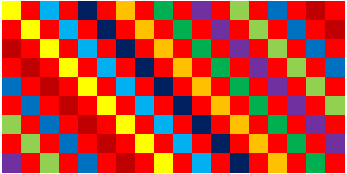

How long does it take to do a full table scan almost from disk?

|

1 2 3 4 5 6 7 8 9 |

SQL> select distinct tablespace_name from ludovico.tinmem order by 1; TABLESPACE_NAME ------------------------------ SYSAUX SYSTEM USERS Elapsed: 00:00:15.05 |

15 seconds! Ok, I’m this virtual machine is on an external drive 5400 RPM… 🙁

Once the table is fully cached in the buffer cache, the query performance progressively improves to ~1 sec.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

SQL> r 1* select distinct tablespace_name from ludovico.tinmem order by 1 TABLESPACE_NAME ------------------------------ SYSAUX SYSTEM USERS Elapsed: 00:00:01.42 SQL> r 1* select distinct tablespace_name from ludovico.tinmem order by 1 TABLESPACE_NAME ------------------------------ SYSAUX SYSTEM USERS Elapsed: 00:00:00.99 |

There is no inmemory segment yet:

|

1 2 3 4 5 |

SQL> SELECT OWNER, SEGMENT_NAME, INMEMORY_PRIORITY, INMEMORY_COMPRESSION 2 FROM V$IM_SEGMENTS; no rows selected |

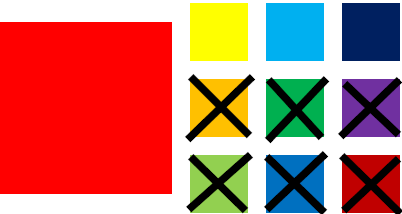

You have to specify it at table level:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SQL> alter table ludovico.tinmem inmemory; Table altered. SQL> SELECT OWNER, SEGMENT_NAME, INMEMORY_PRIORITY, INMEMORY_COMPRESSION FROM V$IM_SEGMENTS; 2 OWNER -------------------------------------------------------------------------------- SEGMENT_NAME -------------------------------------------------------------------------------- INMEMORY INMEMORY_COMPRESS -------- ----------------- LUDOVICO TINMEM HIGH FOR QUERY |

The actual creation of the columnar store takes a while, especially if you don’t specify to create it with high priority. You may have to query the table before seeing the columnar store and its population will also take some time and increase the overall load of the database (on my VBox VM, the performance overhead of columnar store population is NOT negligible).

Once the in-memory store created, the optimizer is ready to use it:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

SQL> explain plan for select distinct tablespace_name from ludovico.tinmem order by 1; Explained. SQL> SELECT PLAN_TABLE_OUTPUT FROM TABLE(DBMS_XPLAN.DISPLAY()); PLAN_TABLE_OUTPUT ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ Plan hash value: 1243998285 -------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | -------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | 1 | 13 | 26132 (2)| 00:00:02 | | 1 | SORT UNIQUE | | 1 | 13 | 25993 (1)| 00:00:02 | | 2 | TABLE ACCESS INMEMORY FULL| TINMEM | 5470K| 67M| 26 (100)| 00:00:01 | -------------------------------------------------------------------------------------- 9 rows selected. |

The previous query now takes half the time on the first attempt!

|

1 2 3 4 5 6 7 8 9 |

SQL> select distinct tablespace_name from ludovico.tinmem order by 1; TABLESPACE_NAME ------------------------------ SYSAUX SYSTEM USERS Elapsed: 00:00:00.50 |

The columnar store for the whole table uses 23M out of 621M, so the compression ratio is very good compared to the non-compressed index previously created!

|

1 2 3 4 5 6 7 8 9 |

SQL> select OWNER, SEGMENT_NAME, SEGMENT_TYPE, INMEMORY_SIZE, BYTES, BYTES_NOT_POPULATED, INMEMORY_PRIORITY, INMEMORY_DISTRIBUTE, INMEMORY_COMPRESSION 2 from V$IM_SEGMENTS; OWNER SEGMENT_NAME SEGMENT_TYPE INMEMORY_SIZE BYTES BYTES_NOT_POPULATED INMEMORY INMEMORY_DISTRI INMEMORY_COMPRESS -------- ------------ ------------ ------------- --------- ------------------- -------- --------------- ----------------- LUDOVICO TINMEM TABLE 23527424 651165696 0 HIGH AUTO DISTRIBUTE FOR QUERY Elapsed: 00:00:00.07 |

This is a very short example. The result here (2x improvement) is influenced by several factors. It is safe to think that with “normal” production conditions the gain will be much higher in almost all the cases.

I just wanted to demonstrate that in-memory columnar store is space efficient and really provides higher speed out of the box.

Now that you know about it, can you live without? 😛