Please see the disclaimer at the end of the post.

Oracle announced the new Oracle Database Backup Logging Recovery Appliance at Open World 2013, but it has not yet been released to the market, and very little information is available on the Oracle website.

During the recent IOUG Collaborate 14, Oracle's master product manager for Data Guard and MAA, Larry Carpenter, unveiled something more about the DBLRA (call it “Debra” to simplify your life 🙂), and I had the chance to discuss it directly with Larry.

At Trivadis we think that this appliance will be a game changer in the world of backup management.

Why?

Well, if you have ever worked for a big company with many hundreds of databases, you have certainly encountered many of these common problems:

- Oracle backups and restores penalized by a shared infrastructure

- Poor backup or restore performance

- Tape drives busy when you need them urgently

- Complex management of backup retentions

That’s not all. As of now, your best recovery point in case of a restore is directly tied to how often you back up your archived logs. Oh yes, you can lower your archive_lag_target parameter and increase your log switch frequency (and thus the I/O), and still have… 10, 15, 30 minutes of possible data loss? Unless you protect your transactions with Data Guard. But this will cost you money. For the additional server and storage. For the licenses. And for the effort required to put in place a Data Guard standby for every database that you want to protect. You want to protect your transactions from a storage failure, and there’s a price to pay.
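To put rough numbers on that exposure, here is a minimal sketch of the arithmetic. It assumes a deliberately simplified model of my own: redo is only safe once its archived log has been backed up off the primary storage, a log switch happens at most every `archive_lag_target` seconds, and the archived log backup runs at a fixed interval. The function name and the model are illustrative assumptions, not an Oracle formula, and the time spent archiving and backing up is ignored.

```python
def worst_case_data_loss_seconds(archive_lag_target_s, archivelog_backup_interval_s):
    """Upper bound on unprotected redo age under a simplified model (assumption).

    Redo written right after a log switch waits up to archive_lag_target_s
    before being archived, then up to archivelog_backup_interval_s before
    the next archived log backup copies it off the primary storage.
    """
    if archive_lag_target_s < 0 or archivelog_backup_interval_s < 0:
        raise ValueError("intervals must be non-negative")
    return archive_lag_target_s + archivelog_backup_interval_s


# Example: forced log switch every 15 minutes, archived logs backed up every 15 minutes
exposure = worst_case_data_loss_seconds(15 * 60, 15 * 60)
print(f"Worst-case exposure: {exposure // 60} minutes")
```

Even with both intervals set aggressively, the exposure never reaches zero in this model, which is exactly the gap that continuous redo shipping is meant to close.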

The Database Backup Logging Recovery Appliance (wow, I need to copy and paste the name to save time! :-)) overcomes these problems with a simple but brilliant idea: leveraging the existing redo log transport processes to ship the redo stream directly to the backup appliance (the DBLRA, of course) or to its Cloud alter ego, hosted by Oracle.

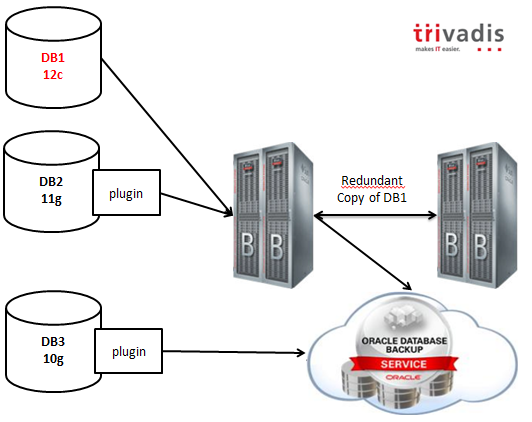

As you can infer from the picture, 12c databases will work natively with the appliance, while previous releases will have a plugin that will enable all the capabilities.

Backups can be mirrored selectively to another DBLRA, or copied to the cloud or to a 3rd party (Virtual) Tape Library.

The backup retention is enforced by the appliance, and expiration and deletion are done automatically using the embedded RMAN catalog.
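To make the idea of an automatically enforced retention more concrete, here is a minimal sketch of a recovery-window style rule, similar in spirit to what RMAN users already know. It is an illustration only: the function name, the inputs, and the logic are my assumptions, it considers full backups only, and it does not describe how the appliance actually decides what to expire.

```python
from datetime import datetime, timedelta


def split_by_recovery_window(backup_times, now, window):
    """Return (kept, obsolete) backup timestamps for a recovery window.

    Simplified rule (assumption): to restore to any point inside the window,
    keep every backup taken inside the window plus the newest backup taken
    at or before the start of the window. Anything older is obsolete.
    """
    window_start = now - window
    ordered = sorted(backup_times)
    # Backups taken at or before the window start; only the newest is still needed
    before = [t for t in ordered if t <= window_start]
    anchor = before[-1:]  # empty if no backup is old enough
    kept = anchor + [t for t in ordered if t > window_start]
    obsolete = before[:-1]
    return kept, obsolete


# Hypothetical backup history with a 7-day recovery window
now = datetime(2014, 5, 1)
backups = [datetime(2014, 4, d) for d in (10, 17, 23, 28, 30)]
kept, obsolete = split_by_recovery_window(backups, now, timedelta(days=7))
print("keep:    ", [t.date().isoformat() for t in kept])
print("obsolete:", [t.date().isoformat() for t in obsolete])
```

The point of the appliance is that nobody has to script or schedule this kind of housekeeping per database anymore.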

Lightning-fast backups and restores are guaranteed by the hardware: the DBLRA is based on the same hardware used by Exadata, with High Capacity disks. Optional storage extensions can be added to increase the capacity, but all the data, as I’ve said, can be offloaded to VTLs in order to use cheaper storage for older backups.

To sum up, the key values are:

- No transaction loss!!

- Lightning fast backups and restores

- Integrated, Oracle engineered, scalable solution for hundreds to thousands of databases

Looking forward to seeing it in action!

I cannot cover all the information I have in a single post, but Trivadis is actively working to be ready to implement it as soon as it launches (estimated in 2014), so feel free to contact me if you are interested in boosting your backup environment. 😉

By the way, I expect that the competitors (IBM, Microsoft?) will try to develop a solution with the same characteristics in terms of reliability, or they will lose ground.

Cheers!

Ludovico

Disclaimer: This post is intended to outline Oracle’s general product direction based on the information gathered through public conferences. It is intended for informational purposes only. The development and release of these functionalities and features including the release dates remain at the sole discretion of Oracle and no documentation is available at this time. The features and commands shown may or may not be accurate when the final product release goes GA (General Availability).

Please refer to the Oracle documentation when it becomes available.