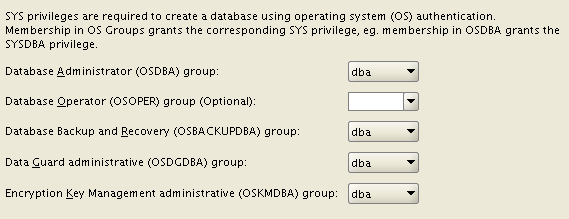

Installing a typical Standard Edition RAC does not differ from installing the Enterprise Edition. For a successful installation, follow the nice quick guide by Yury Velikanov and simply select the Standard Edition when installing the DB software.

Standard Edition and Feature availability

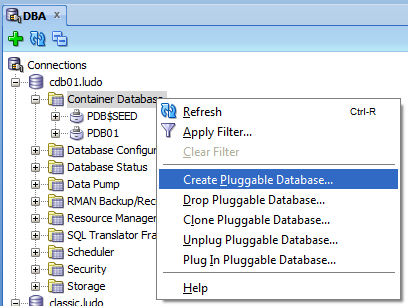

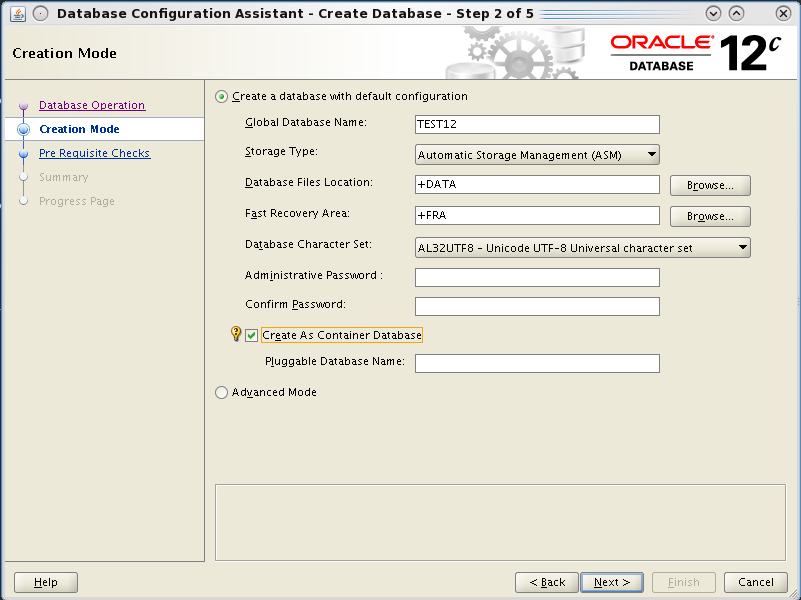

The first thing that struck me is that DBCA still lets you enable pluggable databases, even though the Multitenant option is not available in SE.

So I decided to create a container database, CDB01, from the template files, which means all the EE options are built into the new DB. The pluggable database is named PDB01.

```
[oracle@se12c01 ~]$ sqlplus

SQL*Plus: Release 12.1.0.1.0 Production on Wed Jul 3 14:21:47 2013

Copyright (c) 1982, 2013, Oracle.  All rights reserved.

Enter user-name: / as sysdba

Connected to:
Oracle Database 12c Release 12.1.0.1.0 - 64bit Production
With the Real Application Clusters and Automatic Storage Management options
```

As you can see, the initial banner contains “Real Application Clusters and Automatic Storage Management options”.

The Multitenant option is not available. How does SE react when it is used?

First, on the ROOT db, dba_feature_usage_statistics is empty.

```
SQL> alter session set container=CDB$ROOT;

Session altered.

SQL> select * from dba_feature_usage_statistics;

no rows selected

SQL>
```

This is interesting, because all the features are installed (remember, the database was created from the generic template), so the feature check seems to have moved from the ROOT to the pluggable databases.

On the local PDB I have:

```
SQL> alter session set container=PDB01;

Session altered.

SQL> select * from dba_feature_usage_statistics where lower(name) like '%multitenant%';

NAME                          VERSION           DETECTED_USAGES TOTAL_SAMPLES CURRE
----------------------------- ----------------- --------------- ------------- -----
Oracle Multitenant            12.1.0.1.0                      0             0 FALSE
```

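Having ONE PDB does not trigger the usage of Multitenant (as I was expecting). Note, though, that TOTAL_SAMPLES is 0 as well, so no usage sample had been collected yet when I ran the query.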
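To double-check how many PDBs the container really holds before testing the limit, a small wrapper around `v$pdbs` can help. This is only a sketch: the function names are mine, and it assumes `ORACLE_HOME` and `ORACLE_SID` are set and that OS authentication as SYSDBA works on the node.

```shell
# Sketch: list the PDBs of the container and count the user-created ones.
# Assumptions: ORACLE_HOME/ORACLE_SID are set, "/ as sysdba" works on this node.

# Print one PDB name per line, as seen from CDB$ROOT (includes PDB$SEED).
list_pdbs() {
  "$ORACLE_HOME/bin/sqlplus" -s / as sysdba <<'EOF'
set heading off feedback off pagesize 0
select name from v$pdbs order by con_id;
EOF
}

# Count the names read on stdin, ignoring blank lines and the seed PDB.
count_user_pdbs() {
  awk 'NF && $1 != "PDB$SEED" { n++ } END { print n + 0 }'
}
```

Running `list_pdbs | count_user_pdbs` on this setup should report a single user PDB, which is the number that matters for the SE limit.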

What if I try to create a new pluggable database?

```
SQL> alter session set container=CDB$ROOT;

Session altered.

SQL> create pluggable database PDB02 admin user pdb02admin identified by pdb02admin;
create pluggable database PDB02 admin user pdb02admin identified by pdb02admin
*
ERROR at line 1:
ORA-65010: maximum number of pluggable databases created

SQL>
```

A-AH!! As expected, I can have a maximum of ONE pluggable database in my container.

However, this still allows:

- Smooth migration from SE to a Multitenant Architecture

- Quick upgrade from one release to another

To be sure that unplugging works, I tried it: I unplugged and dropped PDB01, then created PDB02 in its place:

```
SQL> alter pluggable database pdb01 close immediate;

Pluggable database altered.

SQL> alter pluggable database pdb01 unplug into '/u01/app/oracle/unplugged/pdb01/pdb01.xml';

Pluggable database altered.

SQL> drop pluggable database pdb01 keep datafiles;

Pluggable database dropped.

SQL> create pluggable database PDB02 admin user pdb02admin identified by pdb02admin;

Pluggable database created.

SQL> alter pluggable database pdb02 open;

Pluggable database altered.

SQL> select * from dba_feature_usage_statistics where name like '%Multite%';

NAME                          VERSION           DETECTED_USAGES TOTAL_SAMPLES CURRE
----------------------------- ----------------- --------------- ------------- -----
Oracle Multitenant            12.1.0.1.0                      0             0 FALSE
```
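The session above stops at the unplug, so here is a sketch of the opposite step, plugging PDB01 back in from its XML manifest. I did not run this as part of the test: the helper name `plug_in_sql` is made up, and it assumes the datafiles are still where the manifest says (they were dropped with `keep datafiles`), which is why `nocopy` is used.

```shell
# Sketch: print the SQL that plugs an unplugged PDB back in from its manifest.
# Assumption: the datafiles were kept in place (DROP ... KEEP DATAFILES),
# so NOCOPY can reuse them where they are.
plug_in_sql() {
  pdb_name=$1   # name for the plugged-in PDB, e.g. pdb01
  manifest=$2   # XML file written by ALTER PLUGGABLE DATABASE ... UNPLUG INTO
  printf "create pluggable database %s using '%s' nocopy tempfile reuse;\n" \
    "$pdb_name" "$manifest"
  printf 'alter pluggable database %s open;\n' "$pdb_name"
}
```

The output can be piped to SQL*Plus, for example `plug_in_sql pdb01 /u01/app/oracle/unplugged/pdb01/pdb01.xml | sqlplus -s / as sysdba`. On SE I would expect to have to drop PDB02 first, otherwise the plug-in should presumably hit the same ORA-65010 as any other second PDB.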

Other Enterprise Edition features, of course, don’t work:

```
SQL> alter index SYS_C009851 rebuild online tablespace users;
alter index SYS_C009851 rebuild online tablespace users
*
ERROR at line 1:
ORA-00439: feature not enabled: Online Index Build

SQL> alter database move datafile 'DATA/CDB01/E09CA0E26A726D60E043A138A8C0E475/DATAFILE/users.284.819821651';
alter database move datafile 'DATA/CDB01/E09CA0E26A726D60E043A138A8C0E475/DATAFILE/users.284.819821651'
*
ERROR at line 1:
ORA-00439: feature not enabled: online move datafile
```
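Instead of discovering disabled features one ORA-00439 at a time, `v$option` can be queried up front. The sketch below is an illustration, not something from my test session: the function names are mine, the list of options is just a sample, and it assumes the same environment as before (`ORACLE_HOME` set, SYSDBA via OS authentication).

```shell
# Sketch: show whether a few options are enabled in this edition.
# Assumptions: ORACLE_HOME/ORACLE_SID are set, "/ as sysdba" works on this node.

# Print "PARAMETER VALUE" rows for a sample of options from v$option.
show_options() {
  "$ORACLE_HOME/bin/sqlplus" -s / as sysdba <<'EOF'
set heading off feedback off pagesize 0 linesize 200
column parameter format a40
select parameter, value from v$option
 where parameter in ('Online Index Build', 'Real Application Clusters')
 order by parameter;
EOF
}

# Keep only the options whose value is FALSE; reads the rows on stdin.
disabled_only() {
  awk '$NF == "FALSE" { $NF = ""; sub(/[ \t]+$/, ""); print }'
}
```

With this, `show_options | disabled_only` prints only the names of the options that are switched off.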

Create a Service on the RAC Standard Edition (just to check if it works)

I just followed the same steps I would use on an EE. Keep in mind that I’m using an admin-managed DB (something about policy-managed DBs will come, stay tuned).

As you can see, it works pretty well. Compared to 11g, you have to specify the -pdb parameter:

```
[oracle@se12c01 admin]$ srvctl add service -db CDB01 -service testpdb02 -preferred CDB012 -pdb PDB02
[oracle@se12c01 admin]$ srvctl start service -db cdb01 -s testpdb02
[oracle@se12c01 admin]$ crsctl stat res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       se12c01                  STABLE
               ONLINE  ONLINE       se12c02                  STABLE
ora.LISTENER.lsnr
               ONLINE  ONLINE       se12c01                  STABLE
               ONLINE  ONLINE       se12c02                  STABLE
ora.asm
               ONLINE  ONLINE       se12c01                  Started,STABLE
               ONLINE  ONLINE       se12c02                  Started,STABLE
ora.net1.network
               ONLINE  ONLINE       se12c01                  STABLE
               ONLINE  ONLINE       se12c02                  STABLE
ora.ons
               ONLINE  ONLINE       se12c01                  STABLE
               ONLINE  ONLINE       se12c02                  STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       se12c01                  STABLE
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.cdb01.db
      1        ONLINE  ONLINE       se12c01                  Open,STABLE
      2        ONLINE  ONLINE       se12c02                  Open,STABLE
ora.cdb01.testpdb02.svc    <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.cvu
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.oc4j
      1        OFFLINE OFFLINE                               STABLE
ora.scan1.vip
      1        ONLINE  ONLINE       se12c01                  STABLE
ora.scan2.vip
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.scan3.vip
      1        ONLINE  ONLINE       se12c02                  STABLE
ora.se12c01.vip
      1        ONLINE  ONLINE       se12c01                  STABLE
ora.se12c02.vip
      1        ONLINE  ONLINE       se12c02                  STABLE
--------------------------------------------------------------------------------
```
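The full `crsctl stat res -t` listing is long when all you want is the state of the new service. As a rough sketch (the `service_states` helper is my own name, and it relies on the two-line layout shown above), the output can be filtered down to the `.svc` resources:

```shell
# Sketch: reduce "crsctl stat res -t" output (read on stdin) to service resources.
# Prints: resource name, target, state, server. One line per service instance.
# Note: for an OFFLINE resource the server column is empty, so the fourth
# field would be the state details instead.
service_states() {
  awk '
    /^ora\./ { name = ($1 ~ /\.svc$/) ? $1 : ""; next }   # remember .svc resources only
    name != "" && /^[ \t]+[0-9]/ { print name, $2, $3, $4 }
  '
}
```

Usage would be `crsctl stat res -t | service_states`. For a single service, `srvctl status service -db CDB01 -service testpdb02` gives the same information more directly.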

Then I can access my DB (and preferred instance) using the service_name I specified.

```
[oracle@se12c02 admin]$ $ORACLE_HOME/bin/sqlplus pdb02admin/pdb02admin@testpdb02

SQL*Plus: Release 12.1.0.1.0 Production on Wed Jul 3 16:46:06 2013

Copyright (c) 1982, 2013, Oracle.  All rights reserved.

Last Successful login time: Wed Jul 03 2013 16:46:01 +02:00

Connected to:
Oracle Database 12c Release 12.1.0.1.0 - 64bit Production
With the Real Application Clusters and Automatic Storage Management options

SQL> show con_name

CON_NAME
------------------------------
PDB02
SQL>
```
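For the `@testpdb02` alias to resolve, the client needs a matching entry in `tnsnames.ora`. The entry I used is not shown here, so the following is only a sketch of what such an entry could look like: the `tns_entry` helper, the SCAN name `se12c-scan` in the usage note and port 1521 are all assumptions.

```shell
# Sketch: print a tnsnames.ora entry that points an alias at a RAC service.
# Assumptions: the listener port is 1521 and the host is the cluster SCAN name.
tns_entry() {
  alias_name=$1   # name used after "@" in the connect string
  scan_host=$2    # SCAN name of the cluster (hypothetical in the usage note)
  service=$3      # service created with "srvctl add service"
  cat <<EOF
$alias_name =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = $scan_host)(PORT = 1521))
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = $service)
    )
  )
EOF
}
```

For example, `tns_entry testpdb02 se12c-scan testpdb02 >> $ORACLE_HOME/network/admin/tnsnames.ora` would append the entry. Since the service is bound to PDB02 through `-pdb`, connecting through it lands directly in that container.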

Let me know what you think about SE RAC on 12c. Is it valuable for you?

I’m also on twitter: @ludovicocaldara

Cheers

—

Ludo