A few weeks ago I commented on a good post by Martin Bach (@MartinDBA on Twitter, make sure to follow him!).

What I realized is that Policy Managed Databases (PMDs) are not widely used, that there is a lot of misunderstanding about how they work, and that there are some concerns about implementing them in production.

My current employer Trivadis (@Trivadis, make sure to call us if your database needs a health check :-)) uses PMDs as a best practice, so it’s worth spending a few words on them, isn’t it?

Why Policy Managed Databases?

PMDs are an efficient way to manage and consolidate several databases and services with the least effort. They rely on server pools, which physically partition a big cluster into smaller groups of servers. Each pool has three main properties:

- A minimum number of servers required to compose the group

- A maximum number of servers

- A priority (importance) that makes a server pool more important than others

If the cluster loses a server, the following rules apply:

- If a pool has fewer than min servers, a server is moved from a pool that has more than min servers, starting with the one with the lowest priority.

- If a pool has fewer than min servers and no other pool has more than min servers, the server is moved from the server pool with the lowest priority.

- Pools with higher priority may give servers to pools with lower priority as long as their own min server property is honored.

This means that if a server pool has the highest priority, all other server pools can be reduced to satisfy its minimum number of servers.

Generally speaking, when you create a policy managed database (or convert an existing one, of course!), it is assigned to a server pool rather than to a single server. The pool is seen as an abstract resource on which you can put workload.

If you read the definition of Cloud Computing given by the NIST (http://csrc.nist.gov/publications/nistpubs/800-145/SP800-145.pdf) you’ll find something similar:

“Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.”

There are some major benefits in using policy managed databases (that’s solely my opinion):

- PMD instances are created and removed automatically. This means that you can add nodes to, or remove nodes from, the server pools or the whole cluster, and the underlying databases will be expanded or shrunk to follow the new topology.

- Server pools (which are the basis for PMDs) let you give different priorities to different groups of servers. This means that, if correctly configured, you can lose several physical nodes without impacting your most critical applications and without reconfiguring the instances.

- PMDs are the basis for Quality of Service management, an 11gR2 feature that does cluster-wide resource management to achieve predictable performance for critical applications/transactions. QoS is a really advanced topic, so I warn you: do not use it without appropriate knowledge. Again, Trivadis has deep knowledge of it, so you may want to contact us for a consulting service (and why not, perhaps I’ll try to blog about it in the future).

- RAC One Node databases (RONDs?) can work beside PMDs to avoid instance proliferation for non-critical applications.

- Oracle is pushing it to achieve maximum flexibility for the Cloud, so it’s a trendy technology that’s cool to implement!

- I’ll find some other reasons, for sure! 🙂

What changes in real-life DB administration?

Well, the one-to-one relation Server -> Instance disappears, so at the very beginning you’ll have to be prepared for something dynamic (but once configured, things don’t change often).

As Martin pointed out in his blog, you’ll need to configure server pools and think about pools of resources rather than individual configuration items.

The spfile doesn’t contain any information related to specific instances, so the parameters must be database-wide.

The oratab will contain only the dbname, not the instance name, and the dbname is present in the oratab regardless of which server pool the server belongs to.

    +ASM1:/oracle/grid/11.2.0.3:N # line added by Agent
    PMU:/oracle/db/11.2.0.3:N # line added by Agent
    TST:/oracle/db/11.2.0.3:N # line added by Agent

Your scripts should take care of this.

Also, when connecting to your database, you should rely on services and access your database remotely rather than trying to figure out where the instances are running. But if you really need it you can get it:

    # srvctl status database -d PMU
    Instance PMU_4 is running on node node2
    Instance PMU_2 is running on node node3
    Instance PMU_3 is running on node node4
    Instance PMU_5 is running on node node6
    Instance PMU_1 is running on node node7
    Instance PMU_6 is running on node node8
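Because the oratab lists only the database name, a script that needs a local `ORACLE_SID` has to look up the instance name at runtime. Here is a minimal sketch, assuming the `srvctl status database` output keeps the format shown above and that `uname -n` returns the same short node name the cluster reports. The function name `instance_on_node` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "srvctl status database -d <db>" output on stdin
# and print the name of the instance running on the node given as $1.
# Prints nothing if no instance of the database runs on that node.
instance_on_node() {
  awk -v node="$1" '$1 == "Instance" && $NF == node { print $2 }'
}

# Typical use on a cluster node (not executed here, it needs srvctl):
#   ORACLE_SID=$(srvctl status database -d PMU | instance_on_node "$(uname -n)")
#   export ORACLE_SID
```

An empty result is meaningful too: it tells the script that the database has no instance on the local node at the moment, which is a normal situation with server pools.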

An approach for the crontab: sooner or later every DBA needs to schedule tasks with crond. Since a RAC has multiple nodes, you don’t want to run the same script many times but rather choose which node will execute it.

My personal approach (every DBA has their own preference) is to check where the instance with cardinality 1 is running and match it with the current node, e.g.:

    # [ `crsctl stat res ora.tst.db -k 1 | grep STATE=ONLINE | awk '{print $NF}'` == `uname -n` ]
    # echo $?
    0
    # [ `crsctl stat res ora.tst.db -k 1 | grep STATE=ONLINE | awk '{print $NF}'` == `uname -n` ]
    # echo $?
    1

In the example, TST_1 is running on node1, so the first evaluation (run on node1) returns TRUE. The second evaluation is run on node2, so it returns FALSE.

This trick lets you keep an identical crontab on every server and decide at runtime whether the local server is the preferred one to run tasks for the specified database.
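The same check can be wrapped in a small function so that every scheduled script starts with the same guard. This is only a sketch: the function name `runs_on_node` is hypothetical, and it assumes that `crsctl stat res` prints a line of the form `STATE=ONLINE on <node>`, which is what the `grep`/`awk` pipeline above relies on.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "crsctl stat res <resource> -k 1" output on stdin
# and succeed only if the resource is ONLINE on the node given as $1.
runs_on_node() {
  local online_node
  online_node=$(awk '/^STATE=ONLINE/ { print $NF }')
  # Fail when the resource is not online anywhere, even if $1 is empty.
  [ -n "$online_node" ] && [ "$online_node" = "$1" ]
}

# At the top of a wrapper script called from the identical crontab
# (not executed here, it needs crsctl):
#   crsctl stat res ora.tst.db -k 1 | runs_on_node "$(uname -n)" || exit 0
```

Exiting with 0 on the non-preferred nodes keeps cron from reporting the skipped runs as failures.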

A proof of concept with Policy Managed Databases

My good colleague Jacques Kostic has given me access to an enterprise-grade private lab so I can show you some “live operations”.

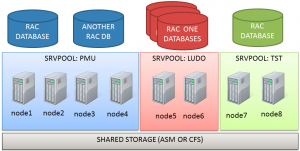

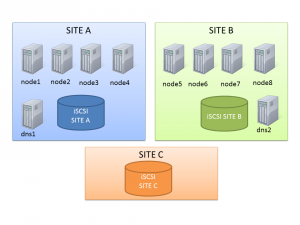

Let’s start with the actual topology: it’s an 8-node stretched RAC with ASM diskgroups with failgroups on the remote site.

This should be enough to show you some capabilities of server pools.

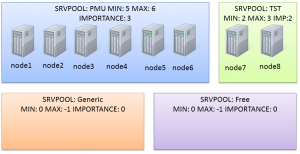

The Generic and Free server pools

After a clean installation, you’ll end up with two default server pools:

The Generic one will contain all non-PMDs (if you use only PMDs it will be empty). The Free one will own the “spare” servers, i.e. those left over when all server pools have reached their maximum size and therefore don’t require more servers.

New server pools

The cluster I’m working on currently has two server pools already defined (PMU and TST):

(the node assignment in the graphic is not relevant here).

They have been created with a command like this one:

    # srvctl add serverpool -g PMU -l 5 -u 6 -i 3
    # srvctl add serverpool -g TST -l 2 -u 3 -i 2

“srvctl -h ” is a good starting point to have a quick reference of the syntax.

You can check the status with:

    # srvctl status serverpool
    Server pool name: Free
    Active servers count: 0
    Server pool name: Generic
    Active servers count: 0
    Server pool name: PMU
    Active servers count: 6
    Server pool name: TST
    Active servers count: 2

and the configuration:

    # srvctl config serverpool
    Server pool name: Free
    Importance: 0, Min: 0, Max: -1
    Candidate server names:
    Server pool name: Generic
    Importance: 0, Min: 0, Max: -1
    Candidate server names:
    Server pool name: PMU
    Importance: 3, Min: 5, Max: 6
    Candidate server names:
    Server pool name: TST
    Importance: 2, Min: 2, Max: 3
    Candidate server names:

Modifying the configuration of serverpools

In this scenario, PMU is too big. The sum of the minimum sizes is 2+5=7 nodes out of 8, so I have only one server that can be used for another server pool without any pool falling below its minimum number of nodes.
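The arithmetic above can be read straight from the configuration. Here is a minimal sketch, assuming the `Importance: …, Min: …, Max: …` line format shown in the `srvctl config serverpool` output. The function name `sum_of_min` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "srvctl config serverpool" output on stdin and
# print the sum of the Min values of all server pools.
sum_of_min() {
  # Fields split on ':' and ',' -> $4 is the number that follows "Min:".
  awk -F'[:,]' '/Min:/ { total += $4 } END { print total + 0 }'
}

# Servers that can go to a new pool without touching any minimum
# (not executed here, it needs srvctl; 8 is the node count of this lab):
#   echo $(( 8 - $(srvctl config serverpool | sum_of_min) ))
```

With the configuration of this lab the sum is 7, which leaves a single server.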

I want to make room for another server pool of two or three nodes, so I reduce the minimum size of the server pool PMU:

    # srvctl modify serverpool -g PMU -l 3

Notice that PMU maxsize is still 6, so I don’t have free servers yet.

    # srvctl status database -d PMU
    Instance PMU_4 is running on node node2
    Instance PMU_2 is running on node node3
    Instance PMU_3 is running on node node4
    Instance PMU_5 is running on node node6
    Instance PMU_1 is running on node node7
    Instance PMU_6 is running on node node8

So, if I try to create another serverpool I’m warned that some resources can be taken offline:

    # srvctl add serverpool -g LUDO -l 2 -u 3 -i 1
    PRCS-1009 : Failed to create server pool LUDO
    PRCR-1071 : Failed to register or update server pool ora.LUDO
    CRS-2736: The operation requires stopping resource 'ora.pmu.db' on server 'node8'
    CRS-2736: The operation requires stopping resource 'ora.pmu.db' on server 'node3'
    CRS-2737: Unable to register server pool 'ora.LUDO' as this will affect running resources, but the force option was not specified

The clusterware proposes to stop two instances of the database pmu in the server pool PMU, because the pool can now shrink from 6 to 3 servers, but I have to confirm the operation with the -f flag.
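Before forcing the change, it can be handy to extract exactly which resources would be stopped and where. Here is a minimal sketch that parses the CRS-2736 lines, assuming the message format shown in the output above. The function name `would_stop` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the error output of a refused srvctl command on
# stdin and print one "<resource> on <server>" line per CRS-2736 message.
would_stop() {
  # Splitting on single quotes: $2 is the resource name, $4 the server name.
  awk -F"'" '/CRS-2736/ { print $2 " on " $4 }'
}

# Usage (not executed here, it needs srvctl):
#   srvctl add serverpool -g LUDO -l 2 -u 3 -i 1 2>&1 | would_stop
```

If the list is acceptable, the same command can then be repeated with -f.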

Modifying the serverpool layout can take time if resources have to be started/stopped.

    # srvctl status serverpool
    Server pool name: Free
    Active servers count: 0
    Server pool name: Generic
    Active servers count: 0
    Server pool name: LUDO
    Active servers count: 2
    Server pool name: PMU
    Active servers count: 4
    Server pool name: TST
    Active servers count: 2

My new server pool ends up with only two nodes, because I’ve set an importance of 1 (PMU wins as it has an importance of 3).

Inviting RAC One Node databases to the party

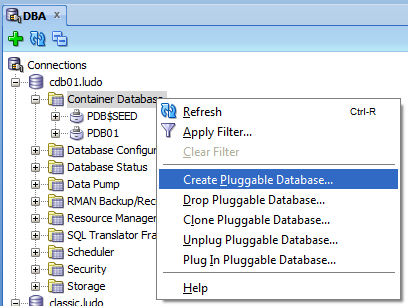

Now that I have some room on my new serverpool, I can start creating new databases.

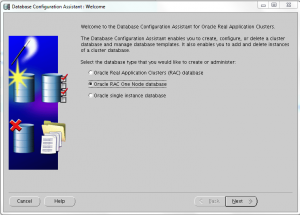

With PMDs I can add two types of databases: RAC or RACONENODE. Depending on the choice, I’ll have a database running on ALL NODES OF THE SERVER POOL or on ONE NODE ONLY. This is a kind of limitation in my opinion, and I hope Oracle will improve it in the near future: it would be great to be able to specify the cardinality at database level too.

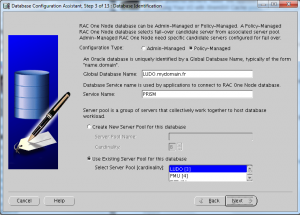

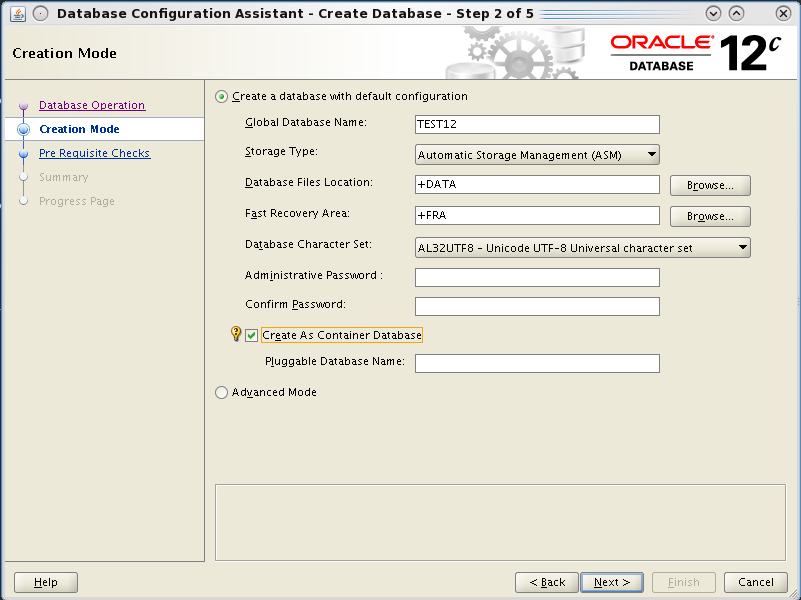

Creating a RAC One Node database is as simple as selecting two radio buttons during the “standard” dbca procedure:

The server pool can be created, or you can specify an existing one (as in this lab):

I’ve created two new RAC One Node databases:

- DB LUDO (service PRISM :-))

- DB VICO (service CHEERS)

I’ve ended up with something like this:

    --------------------------------------------------------------------------------
    NAME           TARGET  STATE        SERVER                   STATE_DETAILS
    --------------------------------------------------------------------------------
    ora.ludo.db      <<<<< RAC ONE
          1        ONLINE  ONLINE       node8                    Open
    ora.ludo.prism.svc
          1        ONLINE  ONLINE       node8
    ora.pmu.db
          1        ONLINE  ONLINE       node7                    Open
          2        ONLINE  ONLINE       node4                    Open
          3        ONLINE  ONLINE       node5                    Open
          4        ONLINE  ONLINE       node6                    Open
    ora.tst.db
          1        ONLINE  ONLINE       node1                    Open
          2        ONLINE  ONLINE       node2                    Open
    ora.vico.cheers.svc
          1        ONLINE  ONLINE       node3
    ora.vico.db      <<<< RAC ONE
          1        ONLINE  ONLINE       node3                    Open

That can be represented with this picture:

RAC One Node databases can be managed as always with online relocation (is it still called O-Motion?)

Losing the nodes

In this situation, what happens if I lose (stop) one node?

    # crsctl stop cluster -n node8
    CRS-2673: Attempting to stop 'ora.crsd' on 'node8'
    CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'node8'
    CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'node8'
    CRS-2673: Attempting to stop 'ora.ludo.prism.svc' on 'node8'
    CRS-2677: Stop of 'ora.ludo.prism.svc' on 'node8' succeeded
    CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.node8.vip' on 'node8'
    CRS-2677: Stop of 'ora.node8.vip' on 'node8' succeeded
    CRS-2672: Attempting to start 'ora.node8.vip' on 'node4'
    CRS-2676: Start of 'ora.node8.vip' on 'node4' succeeded
    CRS-2673: Attempting to stop 'ora.ludo.db' on 'node8'
    CRS-2677: Stop of 'ora.ludo.db' on 'node8' succeeded
    CRS-2672: Attempting to start 'ora.ludo.db' on 'node3'
    CRS-2676: Start of 'ora.ludo.db' on 'node3' succeeded
    CRS-2672: Attempting to start 'ora.ludo.prism.svc' on 'node3'
    CRS-2676: Start of 'ora.ludo.prism.svc' on 'node3' succeeded
    CRS-2673: Attempting to stop 'ora.GRID.dg' on 'node8'
    CRS-2673: Attempting to stop 'ora.DATA.dg' on 'node8'
    CRS-2673: Attempting to stop 'ora.FRA.dg' on 'node8'
    CRS-2673: Attempting to stop 'ora.RECO.dg' on 'node8'
    CRS-2677: Stop of 'ora.DATA.dg' on 'node8' succeeded
    CRS-2677: Stop of 'ora.FRA.dg' on 'node8' succeeded
    CRS-2677: Stop of 'ora.RECO.dg' on 'node8' succeeded
    CRS-2677: Stop of 'ora.GRID.dg' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.asm' on 'node8'
    CRS-2677: Stop of 'ora.asm' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.ons' on 'node8'
    CRS-2677: Stop of 'ora.ons' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.net1.network' on 'node8'
    CRS-2677: Stop of 'ora.net1.network' on 'node8' succeeded
    CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'node8' has completed
    CRS-2677: Stop of 'ora.crsd' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.ctssd' on 'node8'
    CRS-2673: Attempting to stop 'ora.evmd' on 'node8'
    CRS-2673: Attempting to stop 'ora.asm' on 'node8'
    CRS-2677: Stop of 'ora.evmd' on 'node8' succeeded
    CRS-2677: Stop of 'ora.asm' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'node8'
    CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'node8' succeeded
    CRS-2677: Stop of 'ora.ctssd' on 'node8' succeeded
    CRS-2673: Attempting to stop 'ora.cssd' on 'node8'
    CRS-2677: Stop of 'ora.cssd' on 'node8' succeeded

The node belonged to the pool LUDO; however, right after the stop I have this situation:

    # srvctl status serverpool
    Server pool name: Free
    Active servers count: 0
    Server pool name: Generic
    Active servers count: 0
    Server pool name: LUDO
    Active servers count: 2
    Server pool name: PMU
    Active servers count: 3
    Server pool name: TST
    Active servers count: 2

A server has been taken from the pool PMU and given to the pool LUDO. This is because PMU had one more server than its minimum server requirement.

If I keep losing one node at a time, I’ll have the following situations:

- 1 node lost: PMU 3, TST 2, LUDO 2

- 2 nodes lost: PMU 3, TST 2, LUDO 1 (PMU is already at its min and has a higher priority, so LUDO is penalized because it has the lowest priority)

- 3 nodes lost: PMU 3, TST 2, LUDO 0 (as LUDO has the lowest priority)

- 4 nodes lost: PMU 3, TST 1, LUDO 0

- 5 nodes lost: PMU 3, TST 0, LUDO 0
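The sequence above can be reproduced with a toy model. This is only a sketch of the net effect on pool sizes under the rules described in this post, not the real Clusterware algorithm (which moves individual servers between pools). The importance and min values come from the lab, while the function names `pick_victim` and `simulate` are hypothetical.

```shell
#!/usr/bin/env bash
# Toy model: each lost node is "paid" by one pool, chosen as follows:
#   1. the least important pool that has more servers than its min;
#   2. otherwise, the least important pool that still has servers.
names=(PMU TST LUDO)
importance=(3 2 1)
min=(3 2 2)

# Print the index of the least important pool whose size is above a floor:
# its own min when $1 is "min", zero otherwise. Print nothing if none.
pick_victim() {
  local i victim=-1 floor
  for i in "${!names[@]}"; do
    if [ "$1" = "min" ]; then floor=${min[$i]}; else floor=0; fi
    if (( size[i] > floor )) && { (( victim < 0 )) || (( importance[i] < importance[victim] )); }; then
      victim=$i
    fi
  done
  if (( victim >= 0 )); then echo "$victim"; fi
}

# Lose five nodes, one at a time, and print the pool sizes after each loss.
simulate() {
  local lost victim
  size=(4 2 2)   # sizes before the first loss: PMU 4, TST 2, LUDO 2
  for lost in 1 2 3 4 5; do
    victim=$(pick_victim min)
    [ -n "$victim" ] || victim=$(pick_victim zero)
    [ -n "$victim" ] || break
    size[$victim]=$(( size[$victim] - 1 ))
    echo "$lost lost: PMU ${size[0]}, TST ${size[1]}, LUDO ${size[2]}"
  done
}

simulate
```

Running it prints the same pool sizes as the list above, ending with PMU 3, TST 0, LUDO 0 after the fifth loss.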

So, my hyper-super-critical application will still have three nodes, and plenty of resources to run, even after multiple physical failures, because its server pool has the highest priority and a minimum of 3 required servers.

What I would ask Santa if I’m on the Nice List (and if Santa works at Redwood Shores)

Dear Santa, I would like:

- To create databases with node cardinality, to have for example 2 instances in a 3-node server pool

- Server pools that are aware of the physical location when I use stretched clusters, so I could always end up with “at least one active instance per site”.

Think about it 😉

—

Ludovico