(*) Multiple times in this blog post I refer to a problem with new Oracle Home installs and rollback scripts. The problem has been fixed with PSU Jan 2017; I did not notice it before, sorry. Thanks to Martin Berger for the information.

Let’s see some common approaches to Oracle Home patching.

First, how patches are applied

No, I will not talk about how to use opatch 🙂 It is an overview of the “high-level” methods… when you have multiple servers and (possibly) multiple databases per server.

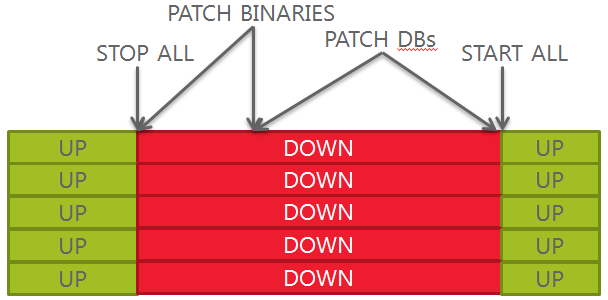

Worst approach (big bang)

1. Stop everything

2. In-place binaries patching

3. Database patching, “big bang” mode

4. Start everything

With this approach, you have a long downtime, a maintenance window that is hard to get (all applications are down at the same time), no control over a single database and no easy rollback in case your binaries get compromised/corrupted by the patch apply.

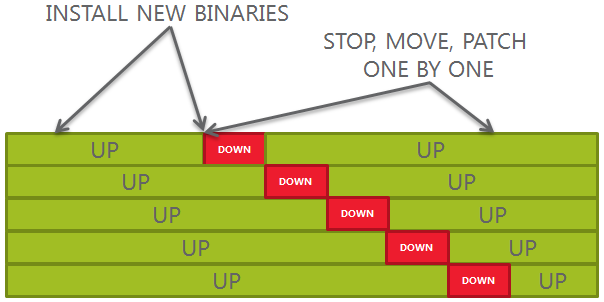

Another bad approach (new install and out-of-place patching)

1. Re-install binaries manually in a new path

2. Patch the new binaries

3. Stop, change OH, patch databases one by one

4. Decommission old binaries

This approach is much better than the previous one, but still has some pitfalls:


- If you have many servers and environments: doing it frequently might be a challenge

- Rollback scripts are not copied automatically: the datapatch will fail unless you copy them by hand (*)

- New installs introduce potential human error, unless you use unattended install with your own scripts

- Do you like to run opatch apply all the time, after all?
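After switching a database to the new Oracle Home and running datapatch, it can help to check what was actually recorded in the SQL patch registry. This is a minimal sketch, assuming 12.1 or later, where the `DBA_REGISTRY_SQLPATCH` view exists; the column selection is just my own choice.

```sql
-- List the SQL patch actions recorded by datapatch, most recent first.
-- A row with ACTION = 'ROLLBACK' or a STATUS other than 'SUCCESS'
-- deserves a closer look.
select patch_id,
       action,
       status,
       action_time,
       description
  from dba_registry_sqlpatch
 order by action_time desc;
```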

Better approach (software cloning)

This approach is very close to the previous one, with the exception that the new Oracle Home is not installed from scratch, but rather cloned from an existing one. This way, the rollback scripts used by the datapatch binary will be there and there will be no errors when patching the databases. (*)

The procedure for Oracle Home cloning is described in the Oracle Documentation.

Another cool thing is that you can clone Oracle Homes across different nodes, so that you can have the same patch level everywhere without repeating the tedious tasks of upgrading OPatch, patching the binaries, and so on.

But still, you have to identify which Oracle Home you have to clone and keep track of the latest version.

Best approach (Golden Images)

The best approach consists of having a central repository for your software, where you store every version of your Oracle Homes, one for each patch level.

Having a central repository allows you to install the software ONCE and use a “clone, patch and store it” strategy. You can, for example, use only one server to do all the patching and then distribute your software images to the different database servers.

This is the concept of Golden Images used by Rapid Home Provisioning, which will be the subject of my next blog post.

Second, which patches are applied

Now that we have seen some Oracle Home patching approaches, it is worth knowing which patches are important in a patching strategy.

You should first get familiar with the differences between PSU/BP and RU/RUR, by reading this valuable post from Mike Dietrich:

https://mikedietrichde.com/2017/10/24/differences-psu-bp-ru-rur/

I will make the assumption that in every case, the critical patches should be applied quarterly, or at least once per year, in order to fix security bugs.

The conservative approach (stability and performance over improvements)

Prior to 12.2, in order to guarantee security and stability, the best approach was to apply only PSUs each quarter.

From 12.2, the most conservative approach is to apply the latest Release Update Revision on top of the oldest possible Release Update. Confusing? Things will be clearer when I write about the 18c New Release Model in a few days…

The cowboy approach (improvements over stability and performance)

Sometimes Bundle Patches and Release Updates contain cool backports from the new releases; sometimes they contain just more bug fixes than the PSUs and RURs; sometimes they fix important stuff like disabling bad transformations that lead to wrong result bugs or other annoying bugs.

Personally, I prefer to include such improvements in my patching strategy: I regularly apply RU for releases >=12.2 and BP for releases <=12.1. Don’t call me cowboy, however 🙂

The incumbent approach (or why you cannot avoid one-offs)

No matter how often you patch: sometimes you hit a bug, and the only solution is either to apply the one-off patch or the workaround, if available.

If you apply the one-off patch for a specific bug, from an Oracle Home maintenance point of view, it would be better to

- apply the same one-off everywhere (that is, in all your Oracle Homes of the very same release), which makes your environment homogeneous.

or

- use a clone of the Oracle Home with the one-off as the basis to apply the release update, and distribute it to the other servers.

Why?

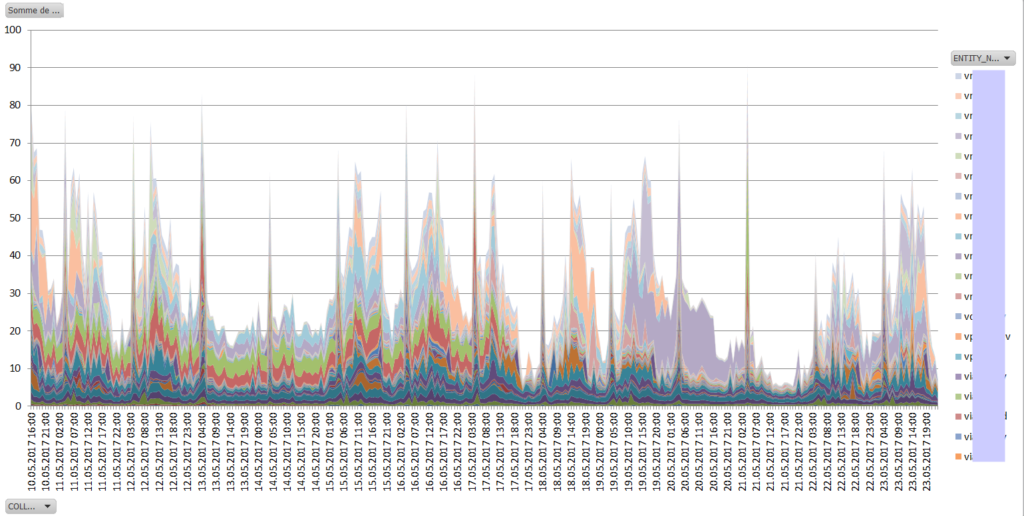

Again, it is a problem with rollback scripts (*), with patch conflicts and also with the number of versions to maintain:

![2018-05-03 16_26_38-Diaporama PowerPoint - [Présentation1]](https://www.ludovicocaldara.net/dba/wp-content/uploads/2018/05/2018-05-03-16_26_38-Diaporama-PowerPoint-Présentation1-1024x622.png)

Fewer paths, less error-prone!
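To check whether a given one-off is present in the Oracle Home a database is running from, without leaving SQL*Plus, something like the following might do. It is only a sketch: it assumes 12.1 or later, where the `DBMS_QOPATCH` package is available, and the patch number is a made-up placeholder.

```sql
-- 12345678 is a hypothetical patch number:
-- replace it with the one-off you are looking for.
select xmltransform(
         dbms_qopatch.is_patch_installed('12345678'),
         dbms_qopatch.get_opatch_xslt
       ) as patch_status
  from dual;
```

Running the same query on every database gives a quick idea of how homogeneous the Oracle Homes really are.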

There is, however, an alternative to one-offs: implementing the workaround instead of applying the patch. Most of the time the workaround consists of disabling “something” through parameters, or worse, hidden parameters (the underscore parameters that the support says you should not set, but advises you to set all the time as a workaround :-))

It might be a good idea to use the workaround instead of applying the patch if you already know that the bug will be fixed in the next Release Update (for example), or if the workaround is so easy to implement that it is not worth creating another version of Oracle Home that will require special attention at the next quarter.

If you apply workarounds, anyway, be sure that you comment EXACTLY why, when and who, so you can decide to unset it at the next parameter review or maintenance… e.g.

```sql
alter system set "_px_groupby_pushdown"=off
  comment='Ludo, 03.05.16: W/A for bug 18499088' scope=both sid='*';

alter system set "_fix_control"='14033181:0','11843466:off','26664361:7','16732417:1','20243268:1'
  comment='Ludo, 20.11.17: fixes of BP171017 + W/A bugs 21303294 24499054' scope=spfile sid='*';
```
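When the next parameter review comes, the comments can be read back from the instance. This is a minimal sketch, assuming the `UPDATE_COMMENT` column of `V$PARAMETER` and `V$SPPARAMETER` and the `V$SYSTEM_FIX_CONTROL` view; the bug numbers are the ones from the `_fix_control` example above.

```sql
-- Parameters changed in the running instance, with the comment
-- given at ALTER SYSTEM time
select name, value, update_comment
  from v$parameter
 where update_comment is not null;

-- Parameters stored in the spfile (a scope=spfile change is visible
-- only here until the next restart)
select sid, name, value, update_comment
  from v$spparameter
 where update_comment is not null;

-- Current state of the fix controls set as workaround
select bugno, value, is_default, description
  from v$system_fix_control
 where bugno in (14033181, 11843466, 26664361, 16732417, 20243268);
```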

Makes sense?