I wish I had more time to blog in recent weeks. Sorry for the delay in this blog series 🙂

If you have not read the two previous blog posts, please do so now. I assume here that you already have the Independent Local-Mode Automaton enabled.

What does the Independent Local-mode Automaton do?

The automaton automates the process of moving the active Grid Infrastructure Oracle Home from the current one to a new one. The new one can be either at a higher patch level or at a lower one. Of course, you will usually want to patch your Grid Infrastructure, which means moving to a higher patch level.

Preparing the new Grid Infrastructure Oracle Home

Starting with 12.2, the GI home is distributed as a zip file that is extracted directly into the new Oracle Home. In this blog post I assume that you want to patch your Grid Infrastructure from an existing 18.3 to a brand new 18.4 (18.5 will be released very soon).

So, if your current OH is /u01/app/grid/crs1830, you might want to prepare the new home in /u01/app/grid/crs1840 by unzipping the software and then patching using the steps described here.

If you already have a golden image with the correct version, you can unzip it directly.
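As a rough sketch of this preparation step, the new home can be staged as shown below. The zip file name and staging directory are placeholders of mine, not part of the original setup, and the function defaults to a dry run that only prints the commands it would execute.

```shell
#!/bin/sh
# Sketch: stage a new GI home by extracting a golden image (or a base release zip).
# The zip path used in the example call is hypothetical; adapt it to your environment.

stage_gi_home() {
    new_home="$1"        # target Oracle Home, e.g. /u01/app/grid/crs1840
    gi_zip="$2"          # golden image or base release zip (placeholder)
    dry_run="${3:-yes}"  # "yes" prints the commands, anything else runs them

    if [ "$dry_run" = "yes" ]; then
        # Only print the commands so the plan can be reviewed first.
        echo "mkdir -p $new_home"
        echo "unzip -q $gi_zip -d $new_home"
    else
        mkdir -p "$new_home" && unzip -q "$gi_zip" -d "$new_home"
    fi
}

# Example: review the commands for the 18.4 home used in this post.
stage_gi_home /u01/app/grid/crs1840 /stage/gi_1840_golden.zip yes
```

Remember that the extraction has to happen on every node of the cluster, so in practice you would run something like this once per node.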

Beware of four important things:

- You have to register the new Oracle home in the Central Inventory using the SW_ONLY install, as described here.

- You must do it on all the nodes in the cluster before upgrading.

- The response file must contain the same groups (DBA, OPER, etc) as the current active Home, otherwise errors will appear.

- You must manually relink your Oracle binaries with the RAC option:

$ cd /u01/app/grid/crs1840/rdbms/lib

$ make -f ins_rdbms.mk rac_on ioracle

In fact, every time a home is attached to the Central Inventory, the binaries are relinked without the RAC option, so it is important to enable RAC again to avoid serious problems when the Automaton moves ASM to the new home.
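A commonly used way to verify that the relink worked is to look at the members of `libknlopt.a`: to my knowledge, `kcsm.o` indicates that the RAC option is on and `ksnkcs.o` that it is off. The sketch below wraps that check; the function names are mine, and you should confirm the object names against your own release before relying on it.

```shell
#!/bin/sh
# Classify the RAC link state from a list of archive members read on stdin.
# Assumption: kcsm.o means RAC on, ksnkcs.o means RAC off.
rac_state_from_members() {
    members="$(cat)"
    if printf '%s\n' "$members" | grep -qx 'kcsm.o'; then
        echo "RAC_ON"
    elif printf '%s\n' "$members" | grep -qx 'ksnkcs.o'; then
        echo "RAC_OFF"
    else
        echo "UNKNOWN"
    fi
}

# Check a real home: list the members of libknlopt.a with ar(1).
check_rac_option() {
    ar -t "$1/rdbms/lib/libknlopt.a" | rac_state_from_members
}

# Usage (on each cluster node, after the relink):
#   check_rac_option /u01/app/grid/crs1840
```

Running the check on every node before the move is cheap compared with discovering the problem when ASM is restarted from the new home.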

Executing the move gihome

If everything is correct, you should now have both the current and the new Oracle Homes correctly registered in the Central Inventory, with the RAC option activated.

You can now do a first eval to check if everything looks good:

    # [ oracle@server1:/u01/app/oracle/home [12:01:52] [18.3.0.0.0 [GRID] SID=GRID] 0 ] #
    $ rhpctl move gihome -sourcehome /u01/app/grid/crs1830 -desthome /u01/app/grid/crs1840 -eval
    server2.cern.ch: Audit ID: 4
    server2.cern.ch: Evaluation in progress for "move gihome" ...
    server2.cern.ch: verifying versions of Oracle homes ...
    server2.cern.ch: verifying owners of Oracle homes ...
    server2.cern.ch: verifying groups of Oracle homes ...
    server2.cern.ch: Evaluation finished successfully for "move gihome".

My personal suggestion, at least for your first experiences with the automaton, is to move the Oracle Home on one node at a time. This way, YOU control the relocation of the services and resources before doing the actual move operation.
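To keep the node-by-node approach repeatable, a small helper can print the sequence of commands before anything is executed. This is only a sketch: the function name is mine, and it deliberately prints the `rhpctl` calls instead of running them, so that you can relocate services and check the cluster between nodes.

```shell
#!/bin/sh
# Print (not run) the rhpctl commands for a node-by-node GI home move.
# Arguments: source home, destination home, then the node names in order.
plan_gihome_move() {
    src="$1"; dst="$2"; shift 2
    # Evaluate first, then move one node at a time.
    echo "rhpctl move gihome -sourcehome $src -desthome $dst -eval"
    for node in "$@"; do
        echo "rhpctl move gihome -sourcehome $src -desthome $dst -node $node"
    done
}

# Example with the homes and nodes used in this post.
plan_gihome_move /u01/app/grid/crs1830 /u01/app/grid/crs1840 server1 server2
```

Only the `-sourcehome`, `-desthome`, `-eval` and `-node` options shown in this post are used here.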

Here is the execution for the first node:

    # [ oracle@server1:/u01/app/oracle/home [15:17:26] [18.3.0.0.0 [GRID] SID=GRID] 0 ] #
    $ rhpctl move gihome -sourcehome /u01/app/grid/crs1830 -desthome /u01/app/grid/crs1840 -node server1
    server2.cern.ch: Audit ID: 4
    server2.cern.ch: verifying versions of Oracle homes ...
    server2.cern.ch: verifying owners of Oracle homes ...
    server2.cern.ch: verifying groups of Oracle homes ...
    server2.cern.ch: starting to move the Oracle Grid Infrastructure home from "/u01/app/grid/crs1830" to "/u01/app/grid/crs1840" on server cluster "CRSTEST-RAC16"
    server2.cern.ch: Executing prepatch and postpatch on nodes: "server1".
    server2.cern.ch: Executing root script on nodes [server1].
    server2.cern.ch: Successfully executed root script on nodes [server1].
    server2.cern.ch: Executing root script on nodes [server1].
    Using configuration parameter file: /u01/app/grid/crs1840/crs/install/crsconfig_params
    The log of current session can be found at:
      /u01/app/oracle/crsdata/server1/crsconfig/crs_postpatch_server1_2018-11-14_03-27-43PM.log
    Oracle Clusterware active version on the cluster is [18.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [70732493].
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'server1'
    CRS-2673: Attempting to stop 'ora.crsd' on 'server1'
    CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'server1'
    CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'server1'
    CRS-2673: Attempting to stop 'ora.mgmt.ghchkpt.acfs' on 'server1'
    CRS-2673: Attempting to stop 'ora.helper336.hlp' on 'server1'
    CRS-2673: Attempting to stop 'ora.chad' on 'server1'
    CRS-2673: Attempting to stop 'ora.chad' on 'server2'
    CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'server1'
    CRS-2673: Attempting to stop 'ora.OCRVOT.dg' on 'server1'
    CRS-2673: Attempting to stop 'ora.MGMT.dg' on 'server1'
    CRS-2673: Attempting to stop 'ora.helper' on 'server1'
    CRS-2673: Attempting to stop 'ora.cvu' on 'server1'
    CRS-2673: Attempting to stop 'ora.qosmserver' on 'server1'
    CRS-2677: Stop of 'ora.helper336.hlp' on 'server1' succeeded
    CRS-2677: Stop of 'ora.OCRVOT.dg' on 'server1' succeeded
    CRS-2677: Stop of 'ora.MGMT.dg' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.asm' on 'server1'
    CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'server1' succeeded
    CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.scan2.vip' on 'server1'
    CRS-2677: Stop of 'ora.helper' on 'server1' succeeded
    CRS-2677: Stop of 'ora.cvu' on 'server1' succeeded
    CRS-2677: Stop of 'ora.scan2.vip' on 'server1' succeeded
    CRS-2677: Stop of 'ora.asm' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'server1'
    CRS-2677: Stop of 'ora.mgmt.ghchkpt.acfs' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.MGMT.GHCHKPT.advm' on 'server1'
    CRS-2677: Stop of 'ora.MGMT.GHCHKPT.advm' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.proxy_advm' on 'server1'
    CRS-2677: Stop of 'ora.chad' on 'server2' succeeded
    CRS-2677: Stop of 'ora.chad' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.mgmtdb' on 'server1'
    CRS-2677: Stop of 'ora.qosmserver' on 'server1' succeeded
    CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'server1' succeeded
    CRS-2677: Stop of 'ora.proxy_advm' on 'server1' succeeded
    CRS-2677: Stop of 'ora.mgmtdb' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.MGMTLSNR' on 'server1'
    CRS-2677: Stop of 'ora.MGMTLSNR' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.server1.vip' on 'server1'
    CRS-2677: Stop of 'ora.server1.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.MGMTLSNR' on 'server2'
    CRS-2672: Attempting to start 'ora.qosmserver' on 'server2'
    CRS-2672: Attempting to start 'ora.scan2.vip' on 'server2'
    CRS-2672: Attempting to start 'ora.cvu' on 'server2'
    CRS-2672: Attempting to start 'ora.server1.vip' on 'server2'
    CRS-2676: Start of 'ora.cvu' on 'server2' succeeded
    CRS-2676: Start of 'ora.server1.vip' on 'server2' succeeded
    CRS-2676: Start of 'ora.MGMTLSNR' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.mgmtdb' on 'server2'
    CRS-2676: Start of 'ora.scan2.vip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER_SCAN2.lsnr' on 'server2'
    CRS-2676: Start of 'ora.LISTENER_SCAN2.lsnr' on 'server2' succeeded
    CRS-2676: Start of 'ora.qosmserver' on 'server2' succeeded
    CRS-2676: Start of 'ora.mgmtdb' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.chad' on 'server2'
    CRS-2676: Start of 'ora.chad' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.ons' on 'server1'
    CRS-2677: Stop of 'ora.ons' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.net1.network' on 'server1'
    CRS-2677: Stop of 'ora.net1.network' on 'server1' succeeded
    CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'server1' has completed
    CRS-2677: Stop of 'ora.crsd' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.asm' on 'server1'
    CRS-2673: Attempting to stop 'ora.crf' on 'server1'
    CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'server1'
    CRS-2673: Attempting to stop 'ora.mdnsd' on 'server1'
    CRS-2677: Stop of 'ora.drivers.acfs' on 'server1' succeeded
    CRS-2677: Stop of 'ora.crf' on 'server1' succeeded
    CRS-2677: Stop of 'ora.mdnsd' on 'server1' succeeded
    CRS-2677: Stop of 'ora.asm' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'server1'
    CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.ctssd' on 'server1'
    CRS-2673: Attempting to stop 'ora.evmd' on 'server1'
    CRS-2677: Stop of 'ora.ctssd' on 'server1' succeeded
    CRS-2677: Stop of 'ora.evmd' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.cssd' on 'server1'
    CRS-2677: Stop of 'ora.cssd' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.gipcd' on 'server1'
    CRS-2673: Attempting to stop 'ora.gpnpd' on 'server1'
    CRS-2677: Stop of 'ora.gipcd' on 'server1' succeeded
    CRS-2677: Stop of 'ora.gpnpd' on 'server1' succeeded
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'server1' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    2018/11/14 15:30:10 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'
    CRS-4123: Starting Oracle High Availability Services-managed resources
    CRS-2672: Attempting to start 'ora.mdnsd' on 'server1'
    CRS-2672: Attempting to start 'ora.evmd' on 'server1'
    CRS-2676: Start of 'ora.mdnsd' on 'server1' succeeded
    CRS-2676: Start of 'ora.evmd' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'server1'
    CRS-2676: Start of 'ora.gpnpd' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.gipcd' on 'server1'
    CRS-2676: Start of 'ora.gipcd' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'server1'
    CRS-2672: Attempting to start 'ora.crf' on 'server1'
    CRS-2676: Start of 'ora.cssdmonitor' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'server1'
    CRS-2672: Attempting to start 'ora.diskmon' on 'server1'
    CRS-2676: Start of 'ora.diskmon' on 'server1' succeeded
    CRS-2676: Start of 'ora.crf' on 'server1' succeeded
    CRS-2676: Start of 'ora.cssd' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'server1'
    CRS-2672: Attempting to start 'ora.ctssd' on 'server1'
    CRS-2676: Start of 'ora.ctssd' on 'server1' succeeded
    CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server1'
    CRS-2676: Start of 'ora.asm' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.storage' on 'server1'
    CRS-2676: Start of 'ora.storage' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'server1'
    CRS-2676: Start of 'ora.crsd' on 'server1' succeeded
    CRS-6017: Processing resource auto-start for servers: server1
    CRS-2673: Attempting to stop 'ora.server1.vip' on 'server2'
    CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'server2'
    CRS-2672: Attempting to start 'ora.ons' on 'server1'
    CRS-2672: Attempting to start 'ora.chad' on 'server1'
    CRS-2677: Stop of 'ora.server1.vip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.server1.vip' on 'server1'
    CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.scan1.vip' on 'server2'
    CRS-2677: Stop of 'ora.scan1.vip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.scan1.vip' on 'server1'
    CRS-2676: Start of 'ora.chad' on 'server1' succeeded
    CRS-2676: Start of 'ora.server1.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER.lsnr' on 'server1'
    CRS-2676: Start of 'ora.scan1.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'server1'
    CRS-2676: Start of 'ora.LISTENER.lsnr' on 'server1' succeeded
    CRS-2679: Attempting to clean 'ora.asm' on 'server1'
    CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'server1' succeeded
    CRS-2681: Clean of 'ora.asm' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server1'
    CRS-2676: Start of 'ora.ons' on 'server1' succeeded
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server1' failed
    CRS-2672: Attempting to start 'ora.asm' on 'server1'
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server1' failed
    CRS-2679: Attempting to clean 'ora.proxy_advm' on 'server1'
    CRS-2681: Clean of 'ora.proxy_advm' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.proxy_advm' on 'server1'
    CRS-2676: Start of 'ora.proxy_advm' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server1'
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server1' failed
    CRS-2672: Attempting to start 'ora.MGMT.GHCHKPT.advm' on 'server1'
    CRS-2676: Start of 'ora.MGMT.GHCHKPT.advm' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.mgmt.ghchkpt.acfs' on 'server1'
    CRS-2676: Start of 'ora.mgmt.ghchkpt.acfs' on 'server1' succeeded
    ===== Summary of resource auto-start failures follows =====
    CRS-2807: Resource 'ora.asm' failed to start automatically.
    CRS-6016: Resource auto-start has completed for server server1
    CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources
    CRS-4123: Oracle High Availability Services has been started.
    Oracle Clusterware active version on the cluster is [18.0.0.0.0]. The cluster upgrade state is [ROLLING PATCH]. The cluster active patch level is [70732493].
    2018/11/14 15:35:23 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
    2018/11/14 15:37:11 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
    2018/11/14 15:37:13 CLSRSC-672: Post-patch steps for patching GI home successfully completed.
    server2.cern.ch: Successfully executed root script on nodes [server1].
    server2.cern.ch: Updating inventory on nodes: server1.
    ========================================
    server2.cern.ch: Starting Oracle Universal Installer...
    The inventory pointer is located at /etc/oraInst.loc
    'UpdateNodeList' was successful.
    server2.cern.ch: Updated inventory on nodes: server1.
    server2.cern.ch: Updating inventory on nodes: server1.
    ========================================
    server2.cern.ch: Starting Oracle Universal Installer...
    The inventory pointer is located at /etc/oraInst.loc
    'UpdateNodeList' was successful.
    server2.cern.ch: Updated inventory on nodes: server1.
    server2.cern.ch: Continue by running 'rhpctl move gihome -destwc <workingcopy_name> -continue [-root | -sudouser <sudo_username> -sudopath <path_to_sudo_binary>]'.
    server2.cern.ch: completed the move of Oracle Grid Infrastructure home on server cluster "CRSTEST-RAC16"

From this output you can see at line 15 that the cluster upgrade state is NORMAL. The cluster is then stopped on node 1 (lines 16 to 100, from CRS-2791 to CRS-4133), the Clusterware entries are replaced in the oracle-ohasd.service file (line 101, CLSRSC-329), and the stack is started again from the new home (lines 102 to 171). The cluster upgrade state is now ROLLING PATCH (line 172). Finally, TFA and the inventory node list are updated.

Before continuing with the other node(s), make sure that all the resources are up and running:

    # [ oracle@server1:/u01/app/oracle/home [15:37:26] [18.3.0.0.0 [GRID] SID=GRID] 0 ] #
    $ crss
    HA Resource               Targets                       States
    -----------               ----------------------------- ----------------------------------------
    ora.ASMNET1LSNR_ASM.lsnr  ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.LISTENER.lsnr         ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.LISTENER_SCAN1.lsnr   ONLINE                        ONLINE on server1
    ora.LISTENER_SCAN2.lsnr   ONLINE                        ONLINE on server2
    ora.MGMT.GHCHKPT.advm     ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.MGMT.dg               ONLINE,ONLINE                 OFFLINE,ONLINE on server2
    ora.MGMTLSNR              ONLINE                        ONLINE on server2
    ora.OCRVOT.dg             OFFLINE,ONLINE                OFFLINE,ONLINE on server2
    ora.asm                   ONLINE,ONLINE,OFFLINE         OFFLINE,ONLINE on server2,OFFLINE
    ora.chad                  ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.cvu                   ONLINE                        ONLINE on server2
    ora.helper                ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.helper336.hlp         ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.server1.vip           ONLINE                        ONLINE on server1
    ora.server2.vip           ONLINE                        ONLINE on server2
    ora.mgmt.ghchkpt.acfs     ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.mgmtdb                ONLINE                        ONLINE on server2
    ora.net1.network          ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.ons                   ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.proxy_advm            ONLINE,ONLINE                 ONLINE on server1,ONLINE on server2
    ora.qosmserver            ONLINE                        ONLINE on server2
    ora.rhpserver             ONLINE                        ONLINE on server2
    ora.scan1.vip             ONLINE                        ONLINE on server1
    ora.LISTENER_LEAF.lsnr
    ora.scan2.vip             ONLINE                        ONLINE on server2

    # [ oracle@server1:/u01/app/oracle/home [15:52:10] [18.4.0.0.0 [GRID] SID=GRID] 1 ] #
    $ crsctl query crs releasepatch
    Oracle Clusterware release patch level is [59717688] and the complete list of patches [27908644 27923415 28090523 28090553 28090557 28256701 28547619 28655784 28655916 28655963 28656071 ] have been applied on the local node. The release patch string is [18.4.0.0.0].
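Eyeballing a long resource listing is error-prone, so a small filter can help. The sketch below assumes a listing in the same three-column layout as the output above (resource name first, then targets and states) and simply prints the names of the resources whose line mentions OFFLINE. The function name is mine, and `crss` in the usage comment is just the alias used in this post.

```shell
#!/bin/sh
# Read a resource listing on stdin and print the name (first column)
# of every resource whose line mentions OFFLINE.
list_offline_resources() {
    awk '/OFFLINE/ { print $1 }'
}

# Usage (assuming an alias or script that prints the listing):
#   crss | list_offline_resources
```

An empty result means nothing is reported OFFLINE. Anything that is printed deserves a look before you move on to the next node.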

You might also want to manually relocate your resources back to node 1 before continuing on node 2.

After that, node 2 can follow the very same procedure:

    # [ oracle@server1:/u01/app/oracle/home [15:54:30] [18.4.0.0.0 [GRID] SID=GRID] 130 ] #
    $ rhpctl move gihome -sourcehome /u01/app/grid/crs1830 -desthome /u01/app/grid/crs1840 -node server2
    server2.cern.ch: Audit ID: 51
    server2.cern.ch: Executing prepatch and postpatch on nodes: "server2".
    server2.cern.ch: Executing root script on nodes [server2].
    server2.cern.ch: Successfully executed root script on nodes [server2].
    server2.cern.ch: Executing root script on nodes [server2].
    Using configuration parameter file: /u01/app/grid/crs1840/crs/install/crsconfig_params
    The log of current session can be found at:
      /u01/app/oracle/crsdata/server2/crsconfig/crs_postpatch_server2_2018-11-14_03-58-21PM.log
    Oracle Clusterware active version on the cluster is [18.0.0.0.0]. The cluster upgrade state is [ROLLING PATCH]. The cluster active patch level is [70732493].
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'server2'
    CRS-2673: Attempting to stop 'ora.crsd' on 'server2'
    CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'server2'
    CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'server2'
    CRS-2673: Attempting to stop 'ora.cvu' on 'server2'
    CRS-2673: Attempting to stop 'ora.rhpserver' on 'server2'
    CRS-2673: Attempting to stop 'ora.OCRVOT.dg' on 'server2'
    CRS-2673: Attempting to stop 'ora.MGMT.dg' on 'server2'
    CRS-2673: Attempting to stop 'ora.qosmserver' on 'server2'
    CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'server2'
    CRS-2673: Attempting to stop 'ora.chad' on 'server1'
    CRS-2673: Attempting to stop 'ora.chad' on 'server2'
    CRS-2673: Attempting to stop 'ora.helper336.hlp' on 'server2'
    CRS-2673: Attempting to stop 'ora.helper' on 'server2'
    CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.scan2.vip' on 'server2'
    CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'server2' succeeded
    CRS-2677: Stop of 'ora.chad' on 'server1' succeeded
    CRS-2677: Stop of 'ora.chad' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.mgmtdb' on 'server2'
    CRS-2677: Stop of 'ora.OCRVOT.dg' on 'server2' succeeded
    CRS-2677: Stop of 'ora.MGMT.dg' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.asm' on 'server2'
    CRS-2677: Stop of 'ora.helper336.hlp' on 'server2' succeeded
    CRS-2677: Stop of 'ora.helper' on 'server2' succeeded
    CRS-2677: Stop of 'ora.scan2.vip' on 'server2' succeeded
    CRS-2677: Stop of 'ora.asm' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'server2'
    CRS-2677: Stop of 'ora.cvu' on 'server2' succeeded
    CRS-2677: Stop of 'ora.qosmserver' on 'server2' succeeded
    CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'server2' succeeded
    CRS-2677: Stop of 'ora.mgmtdb' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.MGMTLSNR' on 'server2'
    CRS-2677: Stop of 'ora.MGMTLSNR' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.server2.vip' on 'server2'
    CRS-2672: Attempting to start 'ora.MGMTLSNR' on 'server1'
    CRS-2677: Stop of 'ora.server2.vip' on 'server2' succeeded
    CRS-2676: Start of 'ora.MGMTLSNR' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.mgmtdb' on 'server1'
    CRS-2676: Start of 'ora.mgmtdb' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.chad' on 'server1'
    CRS-2676: Start of 'ora.chad' on 'server1' succeeded
    Stop JWC
    CRS-5014: Agent "ORAROOTAGENT" timed out starting process "/u01/app/grid/crs1830/bin/ghappctl" for action "stop": details at "(:CLSN00009:)" in "/u01/app/oracle/diag/crs/server2/crs/trace/crsd_orarootagent_root.trc"
    CRS-2675: Stop of 'ora.rhpserver' on 'server2' failed
    CRS-2679: Attempting to clean 'ora.rhpserver' on 'server2'
    CRS-2681: Clean of 'ora.rhpserver' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.mgmt.ghchkpt.acfs' on 'server2'
    CRS-2677: Stop of 'ora.mgmt.ghchkpt.acfs' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.MGMT.GHCHKPT.advm' on 'server2'
    CRS-2677: Stop of 'ora.MGMT.GHCHKPT.advm' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.proxy_advm' on 'server2'
    CRS-2677: Stop of 'ora.proxy_advm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.qosmserver' on 'server1'
    CRS-2672: Attempting to start 'ora.scan2.vip' on 'server1'
    CRS-2672: Attempting to start 'ora.cvu' on 'server1'
    CRS-2672: Attempting to start 'ora.server2.vip' on 'server1'
    CRS-2676: Start of 'ora.cvu' on 'server1' succeeded
    CRS-2676: Start of 'ora.server2.vip' on 'server1' succeeded
    CRS-2676: Start of 'ora.scan2.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER_SCAN2.lsnr' on 'server1'
    CRS-2676: Start of 'ora.LISTENER_SCAN2.lsnr' on 'server1' succeeded
    CRS-2676: Start of 'ora.qosmserver' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.ons' on 'server2'
    CRS-2677: Stop of 'ora.ons' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.net1.network' on 'server2'
    CRS-2677: Stop of 'ora.net1.network' on 'server2' succeeded
    CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'server2' has completed
    CRS-2677: Stop of 'ora.crsd' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.asm' on 'server2'
    CRS-2673: Attempting to stop 'ora.crf' on 'server2'
    CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'server2'
    CRS-2673: Attempting to stop 'ora.mdnsd' on 'server2'
    CRS-2677: Stop of 'ora.drivers.acfs' on 'server2' succeeded
    CRS-2677: Stop of 'ora.crf' on 'server2' succeeded
    CRS-2677: Stop of 'ora.mdnsd' on 'server2' succeeded
    CRS-2677: Stop of 'ora.asm' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'server2'
    CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.ctssd' on 'server2'
    CRS-2673: Attempting to stop 'ora.evmd' on 'server2'
    CRS-2677: Stop of 'ora.ctssd' on 'server2' succeeded
    CRS-2677: Stop of 'ora.evmd' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.cssd' on 'server2'
    CRS-2677: Stop of 'ora.cssd' on 'server2' succeeded
    CRS-2673: Attempting to stop 'ora.gipcd' on 'server2'
    CRS-2673: Attempting to stop 'ora.gpnpd' on 'server2'
    CRS-2677: Stop of 'ora.gpnpd' on 'server2' succeeded
    CRS-2677: Stop of 'ora.gipcd' on 'server2' succeeded
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'server2' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    2018/11/14 16:01:42 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'
    CRS-4123: Starting Oracle High Availability Services-managed resources
    CRS-2672: Attempting to start 'ora.mdnsd' on 'server2'
    CRS-2672: Attempting to start 'ora.evmd' on 'server2'
    CRS-2676: Start of 'ora.mdnsd' on 'server2' succeeded
    CRS-2676: Start of 'ora.evmd' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'server2'
    CRS-2676: Start of 'ora.gpnpd' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.gipcd' on 'server2'
    CRS-2676: Start of 'ora.gipcd' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.crf' on 'server2'
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'server2'
    CRS-2676: Start of 'ora.cssdmonitor' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'server2'
    CRS-2672: Attempting to start 'ora.diskmon' on 'server2'
    CRS-2676: Start of 'ora.diskmon' on 'server2' succeeded
    CRS-2676: Start of 'ora.crf' on 'server2' succeeded
    CRS-2676: Start of 'ora.cssd' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'server2'
    CRS-2672: Attempting to start 'ora.ctssd' on 'server2'
    CRS-2676: Start of 'ora.ctssd' on 'server2' succeeded
    CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server2'
    CRS-2676: Start of 'ora.asm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.storage' on 'server2'
    CRS-2676: Start of 'ora.storage' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'server2'
    CRS-2676: Start of 'ora.crsd' on 'server2' succeeded
    CRS-6017: Processing resource auto-start for servers: server2
    CRS-2673: Attempting to stop 'ora.server2.vip' on 'server1'
    CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'server1'
    CRS-2672: Attempting to start 'ora.ons' on 'server2'
    CRS-2672: Attempting to start 'ora.chad' on 'server2'
    CRS-2677: Stop of 'ora.server2.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.server2.vip' on 'server2'
    CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'server1' succeeded
    CRS-2673: Attempting to stop 'ora.scan1.vip' on 'server1'
    CRS-2677: Stop of 'ora.scan1.vip' on 'server1' succeeded
    CRS-2672: Attempting to start 'ora.scan1.vip' on 'server2'
    CRS-2676: Start of 'ora.server2.vip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER.lsnr' on 'server2'
    CRS-2676: Start of 'ora.chad' on 'server2' succeeded
    CRS-2676: Start of 'ora.scan1.vip' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'server2'
    CRS-2676: Start of 'ora.LISTENER.lsnr' on 'server2' succeeded
    CRS-2679: Attempting to clean 'ora.asm' on 'server2'
    CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'server2' succeeded
    CRS-2681: Clean of 'ora.asm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server2'
    CRS-2676: Start of 'ora.ons' on 'server2' succeeded
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server2' failed
    CRS-2672: Attempting to start 'ora.asm' on 'server2'
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server2' failed
    CRS-2679: Attempting to clean 'ora.proxy_advm' on 'server2'
    CRS-2681: Clean of 'ora.proxy_advm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.proxy_advm' on 'server2'
    CRS-2676: Start of 'ora.proxy_advm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'server2'
    ORA-15150: instance lock mode 'EXCLUSIVE' conflicts with other ASM instance(s)
    CRS-2674: Start of 'ora.asm' on 'server2' failed
    CRS-2672: Attempting to start 'ora.MGMT.GHCHKPT.advm' on 'server2'
    CRS-2676: Start of 'ora.MGMT.GHCHKPT.advm' on 'server2' succeeded
    CRS-2672: Attempting to start 'ora.mgmt.ghchkpt.acfs' on 'server2'
    CRS-2676: Start of 'ora.mgmt.ghchkpt.acfs' on 'server2' succeeded
    ===== Summary of resource auto-start failures follows =====
    CRS-2807: Resource 'ora.asm' failed to start automatically.
    CRS-6016: Resource auto-start has completed for server server2
    CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources
    CRS-4123: Oracle High Availability Services has been started.
    Oracle Clusterware active version on the cluster is [18.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [59717688].

    SQL Patching tool version 18.0.0.0.0 Production on Wed Nov 14 16:09:01 2018
    Copyright (c) 2012, 2018, Oracle. All rights reserved.

    Log file for this invocation: /u01/app/oracle/cfgtoollogs/sqlpatch/sqlpatch_181222_2018_11_14_16_09_01/sqlpatch_invocation.log

    Connecting to database...OK
    Gathering database info...done

    Note: Datapatch will only apply or rollback SQL fixes for PDBs
          that are in an open state, no patches will be applied to closed PDBs.
          Please refer to Note: Datapatch: Database 12c Post Patch SQL Automation
          (Doc ID 1585822.1)

    Bootstrapping registry and package to current versions...done
    Determining current state...done

    Current state of interim SQL patches:
    Interim patch 27923415 (OJVM RELEASE UPDATE: 18.3.0.0.180717 (27923415)):
      Binary registry: Installed
      PDB CDB$ROOT: Applied successfully on 13-NOV-18 04.35.06.794463 PM
      PDB GIMR_DSCREP_10: Applied successfully on 13-NOV-18 04.43.16.948526 PM
      PDB PDB$SEED: Applied successfully on 13-NOV-18 04.43.16.948526 PM

    Current state of release update SQL patches:
      Binary registry:
        18.4.0.0.0 Release_Update 1809251743: Installed
      PDB CDB$ROOT:
        Applied 18.3.0.0.0 Release_Update 1806280943 successfully on 13-NOV-18 04.35.06.791214 PM
      PDB GIMR_DSCREP_10:
        Applied 18.3.0.0.0 Release_Update 1806280943 successfully on 13-NOV-18 04.43.16.940471 PM
      PDB PDB$SEED:
        Applied 18.3.0.0.0 Release_Update 1806280943 successfully on 13-NOV-18 04.43.16.940471 PM

    Adding patches to installation queue and performing prereq checks...done
    Installation queue:
      For the following PDBs: CDB$ROOT PDB$SEED GIMR_DSCREP_10
        No interim patches need to be rolled back
        Patch 28655784 (Database Release Update : 18.4.0.0.181016 (28655784)):
          Apply from 18.3.0.0.0 Release_Update 1806280943 to 18.4.0.0.0 Release_Update 1809251743
        No interim patches need to be applied

    Installing patches...
    Patch installation complete. Total patches installed: 3

    Validating logfiles...done
    Patch 28655784 apply (pdb CDB$ROOT): SUCCESS
      logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/28655784/22509982/28655784_apply__MGMTDB_CDBROOT_2018Nov14_16_11_00.log (no errors)
    Patch 28655784 apply (pdb PDB$SEED): SUCCESS
      logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/28655784/22509982/28655784_apply__MGMTDB_PDBSEED_2018Nov14_16_11_51.log (no errors)
    Patch 28655784 apply (pdb GIMR_DSCREP_10): SUCCESS
      logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/28655784/22509982/28655784_apply__MGMTDB_GIMR_DSCREP_10_2018Nov14_16_11_50.log (no errors)
    SQL Patching tool complete on Wed Nov 14 16:12:50 2018
    2018/11/14 16:13:40 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
    2018/11/14 16:15:28 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
    2018/11/14 16:17:48 CLSRSC-672: Post-patch steps for patching GI home successfully completed.
    server2.cern.ch: Updating inventory on nodes: server2.
    ========================================
    server2.cern.ch: Starting Oracle Universal Installer...
    Checking swap space: must be greater than 500 MB. Actual 16367 MB Passed
    The inventory pointer is located at /etc/oraInst.loc
    'UpdateNodeList' was successful.
    server2.cern.ch: Updated inventory on nodes: server2.
    server2.cern.ch: Updating inventory on nodes: server2.
    ========================================
    server2.cern.ch: Starting Oracle Universal Installer...
    Checking swap space: must be greater than 500 MB. Actual 16367 MB Passed
    The inventory pointer is located at /etc/oraInst.loc
    'UpdateNodeList' was successful.
    server2.cern.ch: Updated inventory on nodes: server2.
    server2.cern.ch: Completed the 'move gihome' operation on server cluster.

As you can see, there are two differences here: the second node was in this case the last one, so the cluster upgrade state goes back to NORMAL, and the GIMR is patched with datapatch (lines 176-227, from "SQL Patching tool version" to "SQL Patching tool complete").
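If you script the node-by-node procedure, it can be handy to extract the upgrade state from the status line shown in the logs, which is also what `crsctl query crs activeversion -f` prints. The helper below is a minimal sketch of mine that parses that line from stdin. It assumes the message keeps the exact wording seen in this post.

```shell
#!/bin/sh
# Print the value inside the brackets that follow "cluster upgrade state is",
# e.g. NORMAL or ROLLING PATCH. Prints nothing if the phrase is absent.
cluster_upgrade_state() {
    sed -n 's/.*cluster upgrade state is \[\([^]]*\)].*/\1/p'
}

# Usage (on a cluster node):
#   crsctl query crs activeversion -f | cluster_upgrade_state
```

After the last node has been moved, the expected value is NORMAL. While some nodes are still on the old home, it is ROLLING PATCH.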

At this point, the cluster has been patched. After some testing, you can safely remove the inactive version of Grid Infrastructure using the deinstall binary ($OLD_OH/deinstall/deinstall).

Quite easy, huh?

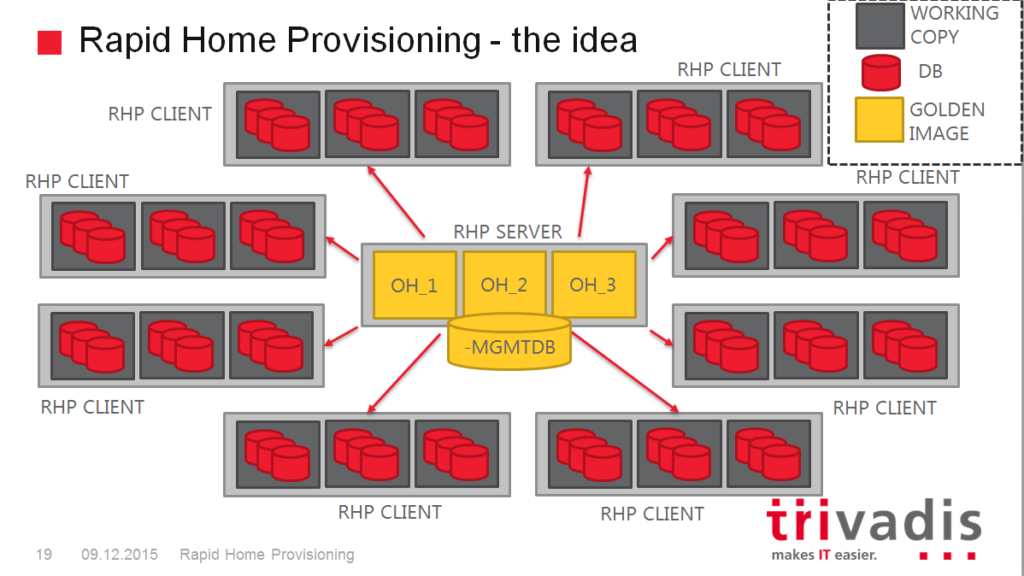

If you combine the Independent Local-mode Automaton with a home-developed solution for the creation and the provisioning of Grid Infrastructure Golden Images, you can easily achieve automated Grid Infrastructure patching of a big, multi-cluster environment.

Of course, Fleet Patching and Provisioning remains the Rolls-Royce: if you can afford it, GI patching and much more are completely automated and developed by Oracle, so you will have no headaches when new versions are released. But the local-mode automaton might be enough for your needs.

—

Ludo