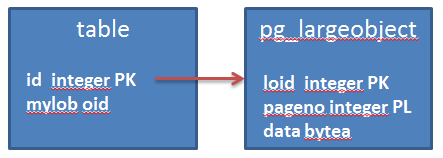

In my previous post I showed how large objects use space inside the table pg_largeobject when inserted.

Let’s see something more:

The table had 2 large objects (for a total of 1024 records):

|

1 2 3 4 5 |

lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 1441792 (1 row) |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

lob_test=# \lo_import '/tmp/randoms'; lo_import 16493 lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 2842624 (1 row) lob_test=# select oid, * from pg_largeobject_metadata; oid | lomowner | lomacl -------+----------+-------- 16491 | 10 | 16492 | 10 | 16493 | 10 | (3 rows) |

As expected, because a random sequence of characters cannot be compressed, the size increased again by 171 blocks (see my previous post for the explanation)

If you read this nice series of blog posts by Frits Hoogland, you should know about the pageinspect extension and the t_infomask 16-bit mask.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

lob_test=# select * from page_header(get_raw_page('pg_largeobject',0)); lsn | checksum | flags | lower | upper | special | pagesize | version | prune_xid -------------+----------+-------+-------+-------+---------+----------+---------+----------- 18/38004C10 | 0 | 0 | 452 | 488 | 8192 | 8192 | 4 | 0 (1 row) -- same result (lower 452, upper 488) for blocks 1...3 lob_test=# select * from page_header(get_raw_page('pg_largeobject',4)); lsn | checksum | flags | lower | upper | special | pagesize | version | prune_xid -------------+----------+-------+-------+-------+---------+----------+---------+----------- 18/380179F8 | 0 | 0 | 360 | 2144 | 8192 | 8192 | 4 | 0 (1 row) lob_test=# select * from page_header(get_raw_page('pg_largeobject',5)); lsn | checksum | flags | lower | upper | special | pagesize | version | prune_xid -------------+----------+-------+-------+-------+---------+----------+---------+----------- 18/381386E0 | 0 | 0 | 36 | 1928 | 8192 | 8192 | 4 | 0 (1 row)-- same result for the remaining blocks |

We already know the mathematics, but we love having all the pieces come together 🙂

We know that: The page header is 24 bytes, and that the line pointers use 4 bytes for each tuple.

The first 4 pages have the lower offset to 452 bytes means that we have (452-24)/4 = 107 tuples.

The 5th page (page number 4) has the lower to 360: (360-24)/4=84 tuples.

The remaining pages have the lower to 36: (36-24)/4 = 3 tuples.

Let’s check if we are right:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

lob_test=# select generate_series as page, (select count(*) from heap_page_items(get_raw_page('pg_largeobject',generate_series))) as tuples from generate_series(0,5); page | tuples ------+-------- 0 | 107 1 | 107 2 | 107 3 | 107 4 | 84 5 | 3 (6 rows) |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

lob_test=# \lo_unlink 16492 lo_unlink 16492 lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 2842624 (1 row) lob_test=# select oid, * from pg_largeobject_metadata; oid | lomowner | lomacl -------+----------+-------- 16491 | 10 | 16493 | 10 | (2 rows) lob_test=# select generate_series as pageno, (select count(*) from heap_page_items(get_raw_page('pg_largeobject',generate_series)) ) from generate_series(0,12); pageno | count --------+------- 0 | 107 1 | 107 2 | 107 3 | 107 4 | 84 5 | 3 6 | 3 7 | 3 8 | 3 9 | 3 10 | 3 11 | 3 12 | 3 |

The space is still used and the tuples are still there.

However, we can check that the tuples are no longer used by checking the validity of their t_xmax. In fact, according to the documentation, if the XMAX is invalid the row is at the latest version:

[…] a tuple is the latest version of its row iff XMAX is invalid or t_ctid points to itself (in which case, if XMAX is valid, the tuple is either locked or deleted). […]

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

lob_test=# select generate_series as pageno, (select count(*) from heap_page_items(get_raw_page('pg_largeobject',generate_series)) where t_infomask::bit(16) & x'0800'::bit(16) = x'0800'::bit(16)) from generate_series(0,12); pageno | count --------+------- 0 | 107 1 | 107 2 | 107 3 | 107 4 | 84 5 | 0 6 | 0 7 | 0 8 | 0 9 | 0 10 | 0 11 | 0 12 | 0 |

Here we go. The large objects are split in compressed chunks that internally behave the same way as regular rows!

If we import another lob we will see that the space is not reused:

|

1 2 3 4 5 6 7 |

lob_test=# \lo_import '/tmp/randoms'; lo_import 16520 lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 4235264 (1 row) |

Flagging the tuples as reusable is the vacuum’s job:

|

1 2 3 4 5 6 7 8 |

lob_test=# vacuum pg_largeobject; VACUUM lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 4235264 (1 row) |

The normal vacuum does not release the empty space, but it can be reused now:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 |

lob_test=# select generate_series as pageno, (select count(*) from heap_page_items(get_raw_page('pg_largeobject',generate_series)) where t_infomask::bit(16) & x'0800'::bit(16) = x'0800'::bit(16)) from generate_series(0,12); pageno | count --------+------- 0 | 107 1 | 107 2 | 107 3 | 107 4 | 84 5 | 0 6 | 0 7 | 0 8 | 0 9 | 0 10 | 0 11 | 0 12 | 0 lob_test=# \lo_import '/tmp/randoms'; lo_import 16521 lob_test=# lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 4235264 (1 row) -- same size as before! lob_test=# select generate_series as pageno, (select count(*) from heap_page_items(get_raw_page('pg_largeobject',generate_series)) where t_infomask::bit(16) & x'0800'::bit(16) = x'0800'::bit(16)) from generate_series(0,12); pageno | count --------+------- 0 | 107 1 | 107 2 | 107 3 | 107 4 | 84 5 | 3 6 | 3 7 | 3 8 | 3 9 | 3 10 | 3 11 | 3 12 | 3 |

If we unlink the lob again and we do a vacuum full, the empty space is released:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

lob_test=# \lo_unlink 16521 lo_unlink 16521 lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 4235264 (1 row) lob_test=# vacuum full pg_largeobject; VACUUM lob_test=# select pg_relation_size('pg_largeobject'); pg_relation_size ------------------ 2842624 (1 row) |