This post is part of a blog series.

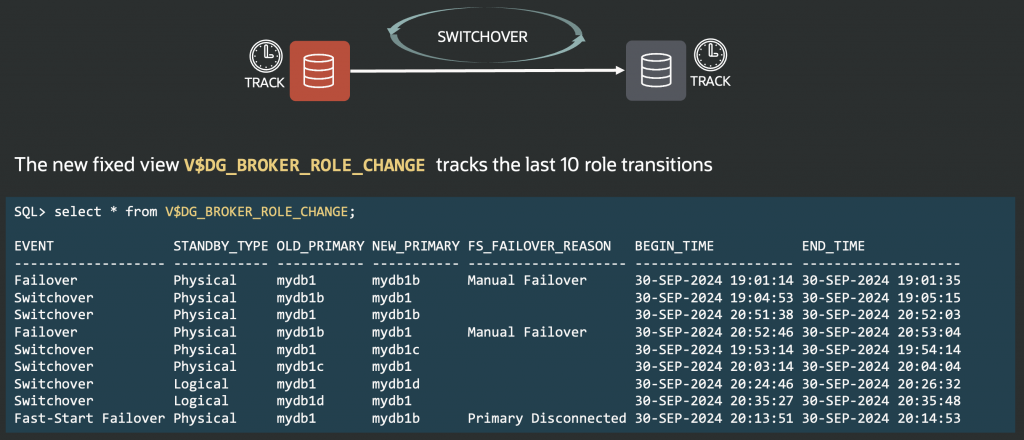

Data Guard 26ai introduces a new fixed view, V$DG_BROKER_ROLE_CHANGE, which tracks the last ten role transitions for a configuration. The view reads its information dynamically from the broker configuration file and records switchovers as well as automatic and manual failovers (with their reasons), along with each operation’s start and end times. We plan to add recovery point SCN details in a future Release Update, but they are not available yet.

This view makes it easy to see recent role changes without searching through alert logs. It also helps identify which database was the primary at any given moment. Because the view keeps only the ten most recent role changes, you can copy its records into a regular table to retain a longer history, for example with a STARTUP trigger on the primary database.
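
As a rough illustration of that idea, the sketch below keeps a history table in sync each time the primary starts. It is only a sketch under several assumptions: the table name `dg_role_change_history` and the trigger name `trg_save_role_changes` are hypothetical, the view's columns are copied with `SELECT *` because they are not listed here, and the trigger owner is assumed to have direct (not role-based) SELECT access to the view. It is also worth verifying in your environment that the broker has populated the view by the time the trigger fires.

```sql
-- One-time setup: an empty table with the same columns as the view.
-- dg_role_change_history is a hypothetical name.
CREATE TABLE dg_role_change_history AS
  SELECT * FROM v$dg_broker_role_change WHERE 1 = 0;

-- Hypothetical trigger: after each startup, append any rows from the view
-- that are not already stored in the history table.
CREATE OR REPLACE TRIGGER trg_save_role_changes
AFTER STARTUP ON DATABASE
BEGIN
  -- Only a primary is open read-write, so skip the copy on standbys.
  IF SYS_CONTEXT('USERENV', 'DATABASE_ROLE') = 'PRIMARY' THEN
    INSERT INTO dg_role_change_history
      SELECT * FROM v$dg_broker_role_change
      MINUS
      SELECT * FROM dg_role_change_history;
  END IF;
END;
/
```

The `MINUS` comparison avoids naming a key column: a row is inserted only when no identical row is already stored. If you know which columns uniquely identify a role change, filtering on those would be cheaper and more explicit.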